Conditional Variance

This section brings us back to the conditional expectation E(Y∣X), this time looking at it in relation to the scatter plot of points (X,Y) generated according to the joint distribution of X and Y. Deviations from this conditional mean lead to a definition of conditional variance, which can be a useful tool for finding variances of random variables that are defined in terms of other random variables.

Review of Conditional Expectation

In an earlier section we defined the conditional expectation E(Y∣X) as a function of X, as follows:

$$
b(x) = E(Y \mid X = x)
$$

We are using the letter b to signify the "best guess" of Y given the value of X. Later in this chapter we will make precise the sense in which it is the best.

In random variable notation,

$$
E(Y \mid X) = b(X)
$$

Recall that the properties of conditional expectations are analogous to those of expectation, but the identities are of random variables, not real numbers. There are also some additional properties due to the aspect of conditioning. We provide a list of the properties here for ease of reference.

- Linear transformation: E(aY+b∣X) = aE(Y∣X)+b

- Additivity: E(Y+W∣X) = E(Y∣X)+E(W∣X)

- "The given variable is a constant": E(g(X)∣X) = g(X)

- "Pulling out" constants: E(g(X)Y∣X) = g(X)E(Y∣X)

- Independence: If X and Y are independent then E(Y∣X)=E(Y), a constant.

The fundamental property that we have used most often is that of iteration:

$$
E(b(X)) = E(E(Y \mid X)) = E(Y)
$$

Therefore

$$
Var(b(X)) = E\big((b(X) - E(Y))^2\big)
$$

Vertical Strips

As an example, let X be standard normal, and let $Y = X^2 + W$ where W is uniform on (−2,2) and is independent of X. Then by properties of conditional expectation,

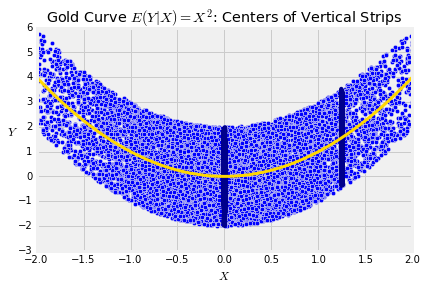

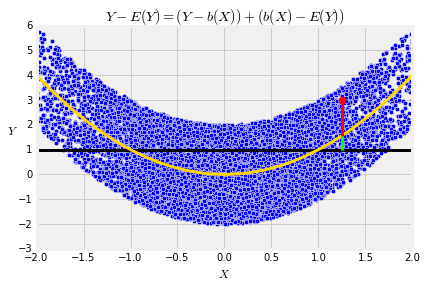

$$
E(Y \mid X) = E(X^2 \mid X) + E(W \mid X) = X^2 + E(W) = X^2 + 0 = X^2
$$

The graph shows a scatter diagram of simulated (X,Y) points, along with the curve $E(Y \mid X) = X^2$. Notice how the curve picks off the centers of the vertical strips. Given a value of X, the conditional expectation E(Y∣X) is essentially (up to sampling variability) the average of the vertical strip at the given value of X.
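The vertical-strip idea can be checked by simulation. The sketch below is only an illustration, not the code used to draw the graph: the seed, sample size, strip centers, and strip half-width are all arbitrary choices made for this example. It averages Y over narrow strips of X and compares each average with x².

```python
import numpy as np

rng = np.random.default_rng(0)      # arbitrary seed, for reproducibility only
n = 200_000                         # arbitrary sample size

x = rng.standard_normal(n)          # X is standard normal
w = rng.uniform(-2, 2, size=n)      # W is uniform on (-2, 2), independent of X
y = x**2 + w                        # Y = X^2 + W

# Narrow vertical strips around a few x-values (illustrative choices)
centers = np.array([-1.5, -0.5, 0.0, 1.0, 2.0])
half_width = 0.05

# Average of Y among the points whose X falls in each strip
strip_means = np.array([
    y[np.abs(x - c) < half_width].mean() for c in centers
])

for c, m in zip(centers, strip_means):
    print(f"x = {c:5.2f}   strip average = {m:6.3f}   x^2 = {c**2:6.3f}")
```

Each strip average should land close to x², with the agreement limited by the number of points that happen to fall in the strip.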

Deviation from the Conditional Mean

As we said in Data 8, the points in each vertical strip form their own little data set, with their own mean. In probability language, the conditional distribution of Y given X=x has mean E(Y∣X=x).

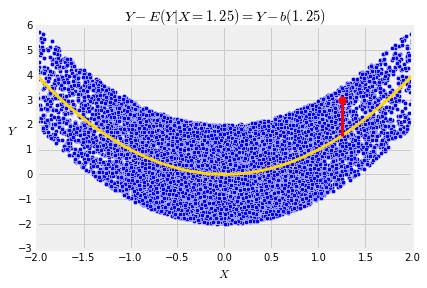

The deviation from the conditional mean given X=x is Y−E(Y∣X=x) = Y−b(x). An example of such a deviation is the length of the red segment in the graph below.

In random variable notation, this deviation from the mean of a random vertical strip becomes

$$
Y - E(Y \mid X) = Y - b(X)
$$

For any distribution, all of the deviations from average cancel each other out and have an average of 0. The calculation below establishes this for each vertical strip:

$$
E(Y - b(X) \mid X) = E(Y \mid X) - b(X) = E(Y \mid X) - E(Y \mid X) = 0
$$

This result helps us understand the relation between X and the deviation Y−b(X) within the vertical strip at X. First, let g(X) be any function of X. Then

$$
E\big((Y - b(X))g(X) \mid X\big) = g(X)E(Y - b(X) \mid X) = 0
$$

So by iteration, the expected product of g(X) and the deviation Y−b(X) is 0:

$$
E\big((Y - b(X))g(X)\big) = E\Big(E\big((Y - b(X))g(X) \mid X\big)\Big) = 0
$$

Because E(Y−b(X))=0, the covariance between g(X) and the deviation is equal to this expected product, and so

$$
Cov(g(X), Y - b(X)) = 0
$$

That is, the deviation from the conditional mean is uncorrelated with functions of X.
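As a rough numerical check of this fact in the example Y = X² + W, the sketch below estimates the covariance between the deviation Y − b(X) and a few functions of X. The particular functions g, the seed, and the sample size are arbitrary choices for illustration; the result holds for any g for which the covariance is defined.

```python
import numpy as np

rng = np.random.default_rng(1)      # arbitrary seed
n = 500_000                         # arbitrary sample size

x = rng.standard_normal(n)
w = rng.uniform(-2, 2, size=n)
y = x**2 + w

deviation = y - x**2                # Y - b(X), using b(X) = X^2 in this example

# A few illustrative functions of X
g_functions = {
    "g(x) = x": lambda t: t,
    "g(x) = x^2": lambda t: t**2,
    "g(x) = sin(x)": np.sin,
    "g(x) = exp(-x^2)": lambda t: np.exp(-t**2),
}

# np.cov returns the 2x2 covariance matrix; the off-diagonal entry is the covariance
covariances = {
    name: np.cov(g(x), deviation)[0, 1] for name, g in g_functions.items()
}

for name, c in covariances.items():
    print(f"{name:18s} estimated Cov(g(X), Y - b(X)) = {c: .4f}")
```

All of the estimated covariances should be close to 0, differing from it only by sampling variability.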

Conditional Variance

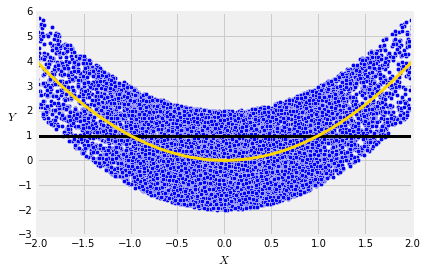

In terms of the scatter plot, the familiar quantity $Var(Y) = E\big((Y - E(Y))^2\big)$ is the mean squared distance between the points and the horizontal line at level E(Y).

In our example, $Y = X^2 + W$ where X is standard normal and W is uniform on (−2,2). So $E(Y) = E(X^2) = 1$ because $X^2$ has the chi-squared (1) distribution.

Clearly, the rough size of the deviations from the flat line at E(Y) is greater than the rough size of the deviations from the conditional expectation curve E(Y∣X).

To quantify this, we will first define the conditional variance of Y given X as

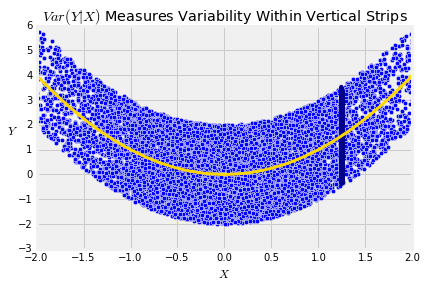

$$
Var(Y \mid X) = E\big((Y - E(Y \mid X))^2 \mid X\big) = E\big((Y - b(X))^2 \mid X\big)
$$

To understand this definition, keep in mind that being "given X" is the same as working within the vertical strip at a random value on the horizontal axis chosen according to the distribution of X. The conditional distribution of Y in that strip has a variance. That's Var(Y∣X).
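As a worked illustration, consider the running example, where b(X) = X² and so the deviation Y − b(X) is just W. The calculation uses the independence of W and X, the fact that E(W) = 0, and the standard formula (b−a)²/12 for the variance of the uniform (a,b) distribution.

$$
Var(Y \mid X) = E\big((Y - X^2)^2 \mid X\big) = E(W^2 \mid X) = E(W^2) = Var(W) = \frac{(2 - (-2))^2}{12} = \frac{4}{3}
$$

In this example every vertical strip has the same variance, so Var(Y∣X) is the constant 4/3. In general, Var(Y∣X) is a function of X and hence a random variable.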

Now let's see how variance and conditional variance are related to each other.

Variance by Conditioning

The vertical distance between a point and the flat line at E(Y) is thus the sum of two distances: the vertical distance between the point and the curve E(Y∣X), and the distance between the curve and the flat line.

$$
Y - E(Y) = \big(Y - E(Y \mid X)\big) + \big(E(Y \mid X) - E(Y)\big) = \big(Y - b(X)\big) + \big(b(X) - E(Y)\big)
$$

The first term on the right hand side is the red segment and the second term is the green segment right below it.

Now we can see how the variance of Y breaks down, using these two pieces.

$$
\begin{aligned}
Var(Y) &= E\big((Y - E(Y))^2\big) \\
&= E\Big(\big((Y - b(X)) + (b(X) - E(Y))\big)^2\Big) \\
&= E\big((Y - b(X))^2\big) + E\big((b(X) - E(Y))^2\big) + 2E\big((Y - b(X))(b(X) - E(Y))\big) \\
&= E\big((Y - b(X))^2\big) + Var(b(X))
\end{aligned}
$$

The cross product term is 0 because b(X)−E(Y) is a function of X (remember that E(Y) is a constant) and the deviation Y−b(X) is uncorrelated with functions of X.

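By iteration, the first term is the expectation of the conditional variance:

$$
E\big((Y - b(X))^2\big) = E\Big(E\big((Y - b(X))^2 \mid X\big)\Big) = E(Var(Y \mid X))
$$

Thus we have shown:

Variance is the sum of the expectation of the conditional variance and the variance of the conditional expectation.

$$
Var(Y) = E(Var(Y \mid X)) + Var(E(Y \mid X))
$$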

It makes sense that the two quantities on the right hand side are involved. The variability of Y has two components:

- the rough size of the variability within the individual vertical strips, that is, the expectation of the conditional variance

- the variability between strips, measured by the variance of the centers of the strips.

The beautiful property of variance is that you can just add the two terms to get the unconditional variance.
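The decomposition can be checked numerically in the example Y = X² + W. The sketch below is a simulation under arbitrary choices of seed and sample size, not a proof. The exact values quoted in the comments follow from Var(W) = 4/3 for the uniform (−2,2) distribution and Var(X²) = 2 for the chi-squared (1) distribution, so Var(Y) should be 4/3 + 2 = 10/3.

```python
import numpy as np

rng = np.random.default_rng(2)      # arbitrary seed
n = 1_000_000                       # arbitrary sample size

x = rng.standard_normal(n)
w = rng.uniform(-2, 2, size=n)
y = x**2 + w

b = x**2                            # conditional expectation b(X) = E(Y | X)

within = np.mean((y - b) ** 2)      # estimates E(Var(Y | X)); exact value 4/3
between = np.var(b)                 # estimates Var(E(Y | X)) = Var(X^2); exact value 2
total = np.var(y)                   # estimates Var(Y); exact value 10/3

# Cross-product term E((Y - b(X))(b(X) - E(Y))), which should be near 0
cross = np.mean((y - b) * (b - y.mean()))

print(f"expectation of conditional variance: {within:.3f}")
print(f"variance of conditional expectation: {between:.3f}")
print(f"sum of the two pieces:               {within + between:.3f}")
print(f"variance of Y:                       {total:.3f}")
print(f"cross-product term:                  {cross:.4f}")
```

The sum of the two pieces and the directly estimated variance of Y should agree up to sampling variability, because the cross-product term is close to 0.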

We know that the lengths of the red and green segments are uncorrelated; the deviation Y−b(X) is uncorrelated with b(X)−E(Y). What the result is saying is that the expected square of the sum of the two distances is the sum of their expected squares. There is a precise analogy between this result and Pythagoras' Theorem, with "uncorrelated" replacing "orthogonal". In later sections we will get a better sense of this analogy. The geometry requires an understanding of orthogonal vectors and is well worth studying.