Sums of IID Samples

After the dry, algebraic discussion of the previous section, it is a relief to finally be able to compute some variances.

Let $X_1, X_2, \ldots, X_n$ be random variables with sum $S_n = \sum_{i=1}^n X_i$. The variance of the sum is

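$$
\begin{align*}
Var(S_n) &= Cov(S_n, S_n) \\
&= \sum_{i=1}^n \sum_{j=1}^n Cov(X_i, X_j) && \text{(bilinearity)} \\
&= \sum_{i=1}^n Var(X_i) + \mathop{\sum\sum}_{1 \le i \ne j \le n} Cov(X_i, X_j)
\end{align*}
$$

We say that the variance of the sum is the sum of all the variances and all the covariances.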
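The formula can be checked exactly on a small joint distribution. The following is a minimal sketch using only Python's standard library. The joint distribution is a hypothetical toy example chosen for illustration: $X_1$ and $X_2$ are two draws made *without* replacement from $\{1, 2, 3\}$, so they are dependent. The helper names `expect` and `cov` are ours, not from any library.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical toy example: two draws WITHOUT replacement from {1, 2, 3}.
# Each of the 6 ordered pairs (x1, x2) with x1 != x2 has probability 1/6.
joint = {pair: Fraction(1, 6) for pair in permutations([1, 2, 3], 2)}

def expect(g):
    """E[g(X1, X2)], computed exactly from the joint distribution."""
    return sum(p * g(x1, x2) for (x1, x2), p in joint.items())

def cov(f, g):
    """Cov(f(X1, X2), g(X1, X2)) = E[fg] - E[f]E[g]."""
    return expect(lambda a, b: f(a, b) * g(a, b)) - expect(f) * expect(g)

X1 = lambda a, b: a      # first draw
X2 = lambda a, b: b      # second draw
S2 = lambda a, b: a + b  # sum of the two draws

# Var(S_2) computed directly as Cov(S_2, S_2)
var_direct = cov(S2, S2)
# Sum of all the variances and all the covariances (bilinearity)
var_expanded = cov(X1, X1) + cov(X2, X2) + cov(X1, X2) + cov(X2, X1)

print(var_direct, var_expanded, cov(X1, X2))
```

Both computations agree. Here $Cov(X_1, X_2) = -1/3$ is negative, so $Var(S_2) = 2/3$ is smaller than $Var(X_1) + Var(X_2) = 4/3$. The covariance terms cannot be dropped when the variables are dependent.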

If $X_1, X_2, \ldots, X_n$ are independent, then all the covariance terms in the formula above are 0.

Therefore, if $X_1, X_2, \ldots, X_n$ are independent, then

$$
Var(S_n) = \sum_{i=1}^n Var(X_i)
$$
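As an exact check that variances add under independence, here is a minimal standard-library sketch. The two variables do not need to have the same distribution. The distributions are hypothetical choices for illustration: $X$ is a fair die roll and $Y$ is an independent indicator with $P(Y = 1) = 1/4$. The distribution of $X + Y$ is built from the product (independent) joint law.

```python
from fractions import Fraction
from itertools import product

# Hypothetical example: X is a fair die roll, Y is an independent indicator
# with P(Y = 1) = 1/4. Independence means joint probabilities factor.
dist_x = {x: Fraction(1, 6) for x in range(1, 7)}
dist_y = {0: Fraction(3, 4), 1: Fraction(1, 4)}

def mean(dist):
    """Expectation of a distribution given as {value: probability}."""
    return sum(p * v for v, p in dist.items())

def var(dist):
    """Variance E[(V - mu)^2] of a distribution {value: probability}."""
    mu = mean(dist)
    return sum(p * (v - mu) ** 2 for v, p in dist.items())

# Distribution of X + Y, accumulated over all (x, y) pairs of the joint law
dist_sum = {}
for (x, px), (y, py) in product(dist_x.items(), dist_y.items()):
    dist_sum[x + y] = dist_sum.get(x + y, 0) + px * py

print(var(dist_sum), var(dist_x) + var(dist_y))
```

Both printed values equal $35/12 + 3/16 = 149/48$, as the formula predicts.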

Thus, for independent random variables $X_1, X_2, \ldots, X_n$, both the expectation and the variance add up nicely. The additivity of expectation holds even without independence.

$$
E(S_n) = \sum_{i=1}^n E(X_i), ~~~~~~ Var(S_n) = \sum_{i=1}^n Var(X_i)
$$

When the random variables are i.i.d., this simplifies even further.

Sum of an IID Sample

Let $X_1, X_2, \ldots, X_n$ be i.i.d., each with mean $\mu$ and SD $\sigma$. You can think of $X_1, X_2, \ldots, X_n$ as draws at random with replacement from a population, or as the results of independent replications of the same experiment.

Let $S_n$ be the sample sum, as above. Then

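$$
E(S_n) = n\mu ~~~~~~ Var(S_n) = n\sigma^2 ~~~~~~ SD(S_n) = \sqrt{n}\sigma
$$

This implies that as the sample size $n$ increases, the center of the distribution of the sum $S_n$ moves linearly in $n$ (to the right when $\mu > 0$). Its SD also grows, though only like $\sqrt{n}$, so the distribution becomes more spread out.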
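To see $E(S_n) = n\mu$ and $SD(S_n) = \sqrt{n}\sigma$ concretely, this sketch computes the exact distribution of $S_n$ by repeatedly convolving the distribution of one draw with itself. The population, a fair die with $\mu = 7/2$ and $\sigma^2 = 35/12$, is an illustrative assumption, and the helper names are hypothetical. Exact fractions are used rather than simulation so the results are deterministic.

```python
import math
from fractions import Fraction

# Illustrative population for one draw: a fair die
die = {v: Fraction(1, 6) for v in range(1, 7)}

def convolve(d1, d2):
    """Distribution of the sum of independent draws from d1 and d2."""
    out = {}
    for a, pa in d1.items():
        for b, pb in d2.items():
            out[a + b] = out.get(a + b, 0) + pa * pb
    return out

def mean(d):
    return sum(p * v for v, p in d.items())

def var(d):
    mu = mean(d)
    return sum(p * (v - mu) ** 2 for v, p in d.items())

mu, sigma2 = mean(die), var(die)  # 7/2 and 35/12

def sum_dist(n):
    """Exact distribution of S_n, the sum of n i.i.d. die rolls."""
    d = die
    for _ in range(n - 1):
        d = convolve(d, die)
    return d

for n in [1, 4, 16]:
    s = sum_dist(n)
    # mean grows like n, SD grows like sqrt(n)
    print(n, mean(s), var(s), math.sqrt(var(s)))
```

Each time $n$ is multiplied by 4, the expectation is multiplied by 4 but the SD only doubles.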

Here is one of the most important applications of these results.

Variance of the Binomial

Let $X$ have the binomial $(n, p)$ distribution. We know that $X = \sum_{j=1}^n I_j$, where $I_1, I_2, \ldots, I_n$ are i.i.d. indicators, each taking the value 1 with probability $p$ and 0 otherwise. Each of these indicators has expectation $p$ and variance $pq = p(1-p)$. Therefore

$$
E(X) = np ~~~~~~ Var(X) = npq ~~~~~~ SD(X) = \sqrt{npq}
$$

For example, if $X$ is the number of heads in 100 tosses of a fair coin, then

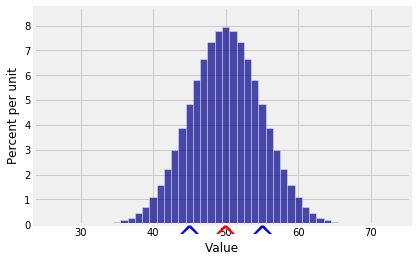

$$
E(X) = 100 \times 0.5 = 50 ~~~~~~ SD(X) = \sqrt{100 \times 0.5 \times 0.5} = 5
$$

Here is the distribution of $X$. You can see that there is almost no probability outside the range $E(X) \pm 3SD(X)$, that is, outside 35 to 65.

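```python
import numpy as np
from scipy import stats
from datascience import *  # Table
from prob140 import *      # prob140 helpers used below: .values(...).probability(...) and Plot

# Possible numbers of heads, 25 through 74 (arange excludes the stop value 75)
k = np.arange(25, 75, 1)
# P(X = k) for X with the binomial (100, 0.5) distribution
binom_probs = stats.binom.pmf(k, 100, 0.5)
# Distribution object pairing each value k with its probability
binom_dist = Table().values(k).probability(binom_probs)
# Probability histogram with the expectation and SD marked
Plot(binom_dist, show_ev=True, show_sd=True)
```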
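The plot can be backed up with a short exact computation. This is a sketch that uses only Python's standard library (`fractions` and `math.comb`) instead of the scipy and prob140 calls above. It computes the binomial $(100, 0.5)$ probabilities exactly, confirms $E(X) = np = 50$ and $Var(X) = npq = 25$, and totals the probability within $E(X) \pm 3SD(X)$.

```python
from fractions import Fraction
from math import comb, sqrt

n, p = 100, Fraction(1, 2)
q = 1 - p

# Exact binomial (n, p) probabilities P(X = k), k = 0, ..., n
pmf = {k: comb(n, k) * p**k * q**(n - k) for k in range(n + 1)}

ev = sum(k * pk for k, pk in pmf.items())                   # should equal n*p
variance = sum((k - ev) ** 2 * pk for k, pk in pmf.items())  # should equal n*p*q
sd = sqrt(variance)

# Probability within E(X) +/- 3 SD(X), i.e. from 35 to 65 inclusive
lo, hi = ev - 3 * sd, ev + 3 * sd
inside = sum(pk for k, pk in pmf.items() if lo <= k <= hi)

print(ev, variance, sd, float(inside))
```

The printed probability is just under 1, which is consistent with the histogram.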