Bivariate Normal Distribution

The multivariate normal distribution is defined in terms of a mean vector and a covariance matrix. The units of covariance are often hard to understand, as they are the product of the units of the two variables.

Normalizing the covariance so that it is easier to interpret is a good idea. As you have seen in exercises, for jointly distributed random variables X and Y the correlation between X and Y is defined as

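$$
r_{X,Y} = \frac{\text{Cov}(X,Y)}{\sigma_X\sigma_Y} = E\left(\frac{X-\mu_X}{\sigma_X}\cdot\frac{Y-\mu_Y}{\sigma_Y}\right) = E(X^*Y^*)
$$

where $X^*$ is $X$ in standard units and $Y^*$ is $Y$ in standard units.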
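As a quick empirical check of the last expression, here is a minimal sketch that computes a sample correlation as the average product of the two variables in standard units and compares it with NumPy's `np.corrcoef`. The helper names `standard_units` and `correlation` are illustrative and defined here. The simulated data ($Y = 2X$ plus independent standard normal noise, so that the true correlation is $2/\sqrt{5} \approx 0.894$) is an assumption chosen for the example.

```python
import numpy as np

def standard_units(values):
    """Convert to standard units: (value - mean) / SD, using the population SD (ddof=0)."""
    values = np.asarray(values, dtype=float)
    return (values - values.mean()) / values.std()

def correlation(x, y):
    """Sample correlation as the average product of x and y in standard units."""
    return np.mean(standard_units(x) * standard_units(y))

rng = np.random.default_rng(0)
x = rng.normal(size=10_000)
y = 2 * x + rng.normal(size=10_000)   # Y depends linearly on X, plus independent noise

r = correlation(x, y)
print(r)                          # should be close to 2 / sqrt(5)
print(np.corrcoef(x, y)[0, 1])    # NumPy's built-in gives the same value
```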

Properties of Correlation

You showed all of these in exercises.

- $r_{X,Y}$ depends only on standard units and hence is a pure number with no units.

- $r_{X,Y} = r_{Y,X}$

- $-1 \le r_{X,Y} \le 1$

- If $Y = aX + b$ with $a \ne 0$, then $r_{X,Y}$ is 1 or $-1$ according to whether $a$ is positive or negative.

We say that $r_{X,Y}$ measures the linear association between $X$ and $Y$.
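The properties above can also be checked numerically on simulated data. This is only a sketch, using `np.corrcoef` for the sample correlation; the data and the particular constants $a$ and $b$ are arbitrary choices made for illustration. Sample correlations obey the same algebra, so each check should print `True`.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=1_000)
y = x**2 + rng.normal(size=1_000)   # an arbitrary, non-linear relation

def r(u, v):
    """Sample correlation of u and v."""
    return np.corrcoef(u, v)[0, 1]

# Symmetry: r(X, Y) = r(Y, X)
print(np.isclose(r(x, y), r(y, x)))

# Bounds: -1 <= r <= 1
print(-1 <= r(x, y) <= 1)

# Exact linear relations give r = +1 or -1 according to the sign of the slope a
print(np.isclose(r(x, 3 * x + 5), 1.0))
print(np.isclose(r(x, -0.5 * x + 2), -1.0))

# Pure number: changing the units of X (e.g. meters to centimeters) leaves r unchanged
print(np.isclose(r(100 * x, y), r(x, y)))
```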

Variance of a Sum

Rewrite the formula for correlation to see that

$$
\text{Cov}(X,Y) = r_{X,Y}\sigma_X\sigma_Y
$$

So the variance of $X+Y$ is

$$
\sigma_{X+Y}^2 = \sigma_X^2 + \sigma_Y^2 + 2r_{X,Y}\sigma_X\sigma_Y
$$

Notice the parallel with the formula for the squared length of the sum of two vectors, $\|a+b\|^2 = \|a\|^2 + \|b\|^2 + 2\|a\|\|b\|\cos(\theta)$, with correlation playing the role of the cosine of the angle between the two vectors. If the angle is 90 degrees, then the cosine is 0. This corresponds to the correlation being zero, and hence to the random variables being uncorrelated.
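A minimal sketch checking the variance formula on simulated data. Because the sample variances, standard deviations, and correlation (all computed here with divisor $n$) satisfy the same algebraic identity, the two sides agree up to floating-point rounding rather than only approximately. The last lines make the vector analogy concrete: for the centered data vectors, the cosine of the angle between them is exactly the sample correlation. The distributions used are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000
x = rng.normal(loc=0, scale=2, size=n)             # sigma_X = 2
y = 0.5 * x + rng.normal(loc=0, scale=1, size=n)   # correlated with X

sd_x, sd_y = x.std(), y.std()                      # population SDs (ddof=0)
r_xy = np.corrcoef(x, y)[0, 1]

lhs = np.var(x + y)                                # variance of the sum
rhs = sd_x**2 + sd_y**2 + 2 * r_xy * sd_x * sd_y   # the formula above
print(lhs, rhs)                                    # equal up to rounding

# Vector analogy: cosine of the angle between the centered data vectors
xc, yc = x - x.mean(), y - y.mean()
cos_angle = xc @ yc / (np.linalg.norm(xc) * np.linalg.norm(yc))
print(np.isclose(cos_angle, r_xy))
```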

We will visualize this idea in the case where the joint distribution of X and Y is bivariate normal.

Standard Bivariate Normal Distribution

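Let $X$ and $Z$ be independent standard normal variables, that is, bivariate normal random variables with mean vector $\mathbf{0}$ and covariance matrix equal to the identity. Now fix a number $\rho$ (that's the Greek letter rho, the lower case r) so that $-1 < \rho < 1$, and let

$$
A = \begin{bmatrix} 1 & 0 \\ \rho & \sqrt{1-\rho^2} \end{bmatrix}
$$

Define a new random variable $Y = \rho X + \sqrt{1-\rho^2}\,Z$, and notice that

$$
\begin{bmatrix} X \\ Y \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ \rho & \sqrt{1-\rho^2} \end{bmatrix} \begin{bmatrix} X \\ Z \end{bmatrix} = A \begin{bmatrix} X \\ Z \end{bmatrix}
$$

So $X$ and $Y$ have the bivariate normal distribution with mean vector $\mathbf{0}$ and covariance matrix

$$
AIA^T = \begin{bmatrix} 1 & 0 \\ \rho & \sqrt{1-\rho^2} \end{bmatrix} \begin{bmatrix} 1 & \rho \\ 0 & \sqrt{1-\rho^2} \end{bmatrix} = \begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}
$$

We say that $X$ and $Y$ have the standard bivariate normal distribution with correlation $\rho$.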
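The matrix product above, and the claim about the distribution of $(X, Y)$, can both be checked numerically. This sketch assumes $\rho = 0.6$ to match the simulation in this section and uses NumPy's random generator in place of `stats.norm.rvs`; it compares the empirical covariance matrix of the transformed points with the theoretical one.

```python
import numpy as np

rho = 0.6
A = np.array([[1.0, 0.0],
              [rho, np.sqrt(1 - rho**2)]])
print(A @ A.T)        # [[1, rho], [rho, 1]] up to rounding

# Simulate (X, Z) i.i.d. standard normal and transform by A
rng = np.random.default_rng(3)
xz = rng.standard_normal(size=(2, 100_000))   # row 0 is X, row 1 is Z
xy = A @ xz                                   # row 0 is X, row 1 is Y = rho X + sqrt(1 - rho^2) Z
print(np.cov(xy))     # empirical covariance matrix (rows are variables)
```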

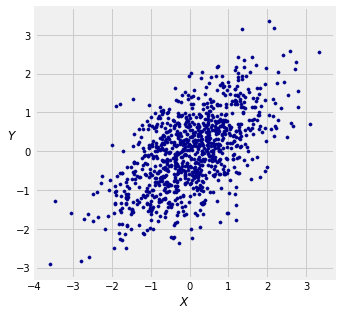

The graph below shows the empirical distribution of 1000 (X,Y) points in the case ρ=0.6. You can change the value of rho and see how the scatter diagram changes. It will remind you of numerous such simulations in Data 8.

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy import stats

# Plotting parameters
plt.figure(figsize=(5, 5))
plt.gca().set_aspect('equal')
plt.xlabel('$X$')
plt.ylabel('$Y$', rotation=0)
plt.xticks(np.arange(-4, 4.1))
plt.yticks(np.arange(-4, 4.1))

# X, Z, and Y
x = stats.norm.rvs(0, 1, size=1000)    # X: standard normal
z = stats.norm.rvs(0, 1, size=1000)    # Z: standard normal, independent of X
rho = 0.6
y = rho*x + np.sqrt(1 - rho**2)*z      # Y = rho X + sqrt(1 - rho^2) Z

plt.scatter(x, y, color='darkblue', s=10);
```

Correlation as a Cosine

We have defined

$$
Y = \rho X + \sqrt{1-\rho^2}\,Z
$$

where $X$ and $Z$ are i.i.d. standard normal.

Let’s understand this construction geometrically. A good place to start is the joint density of X and Z, which has circular symmetry.

The X and Z axes are orthogonal. Let’s see what happens if we twist them.

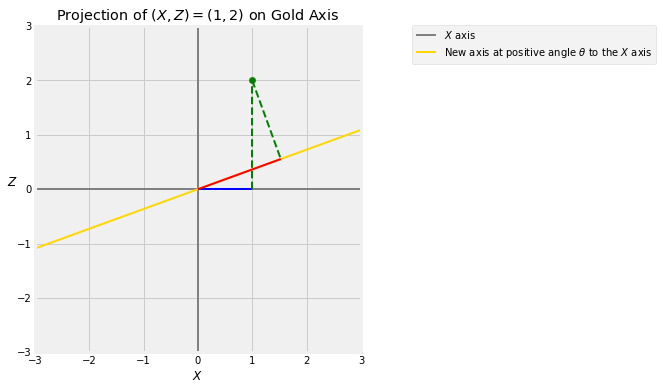

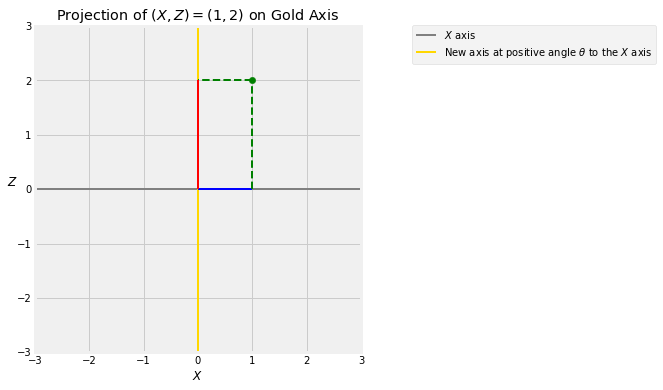

Take any positive angle of θ degrees and draw a new axis at angle θ to the original X axis. Every point (X,Z) has a projection onto this axis.

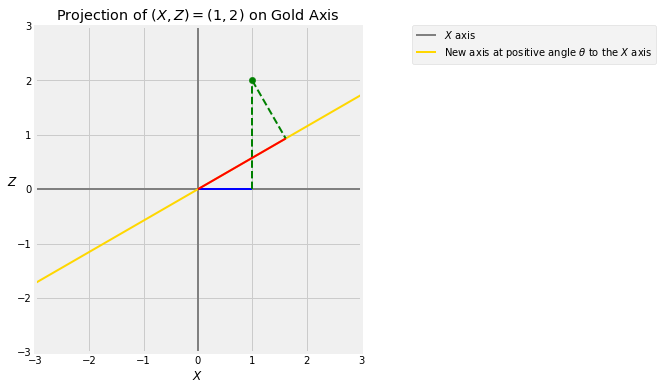

The figure below shows the projection of the point (X,Z) = (1,2) onto the gold axis, which is at an angle of θ degrees to the X axis. The blue segment is the value of X. You get it by dropping the perpendicular from (1,2) to the horizontal axis; that is called projecting (1,2) onto the horizontal axis.

The red segment is the projection of (1,2) onto the gold axis, obtained by dropping the perpendicular from (1,2) to the gold axis.

Vary the value of θ in the cell below to see how the projection changes as the gold axis rotates.

```python
theta = 20
projection_1_2(theta)
```

Let Y be the length of the red segment, and remember that X is the length of the blue segment. When θ is very small, Y is almost equal to X. When θ approaches 90 degrees, Y is almost equal to Z.

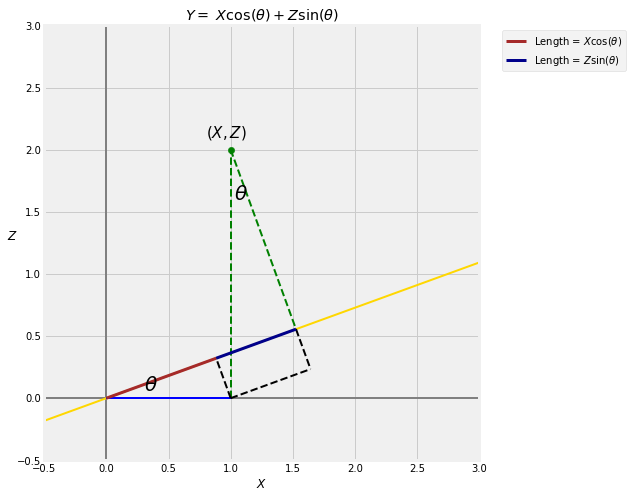

A little trigonometry shows that $Y = X\cos(\theta) + Z\sin(\theta)$.

```python
projection_trig()
```

Thus

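$$
Y = X\cos(\theta) + Z\sin(\theta) = \rho X + \sqrt{1-\rho^2}\,Z
$$

where $\rho = \cos(\theta)$, because $\sin(\theta) = \sqrt{1-\cos^2(\theta)}$ for $0 < \theta < 180$ degrees.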
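The projection can be computed as a dot product with a unit vector pointing along the gold axis. In this sketch, `project_onto_axis` is a hypothetical helper, not the `projection_1_2` function used to draw the figures. It measures θ in degrees to match the text, so it converts to radians before calling `np.cos` and `np.sin`. The point (1, 2) and θ = 20 degrees mirror the example above.

```python
import numpy as np

def project_onto_axis(point, theta_degrees):
    """Signed length of the projection of a 2D point onto the axis at angle
    theta (in degrees) to the horizontal axis: a dot product with a unit vector."""
    theta = np.radians(theta_degrees)
    unit = np.array([np.cos(theta), np.sin(theta)])
    return np.dot(point, unit)

x, z = 1, 2
theta = 20
y = project_onto_axis(np.array([x, z]), theta)

rho = np.cos(np.radians(theta))
print(y)                                   # about 1.624
print(rho * x + np.sqrt(1 - rho**2) * z)   # same value, via rho = cos(theta)
```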

The sequence of graphs below illustrates the transformation for θ=30 degrees.

```python
theta = 30
projection_1_2(theta)
```

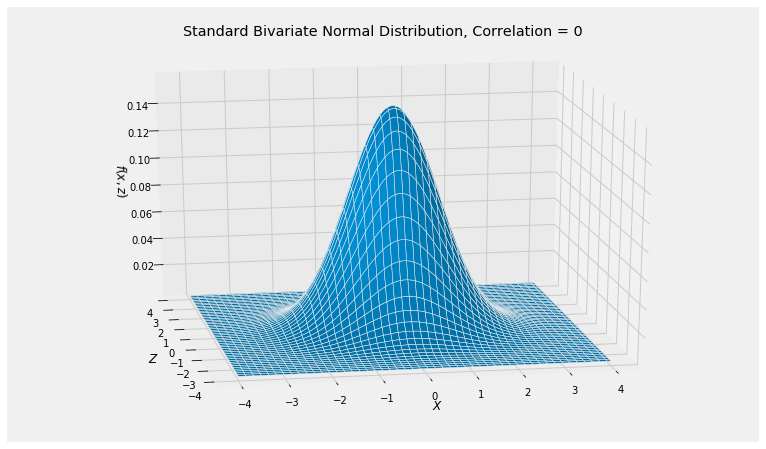

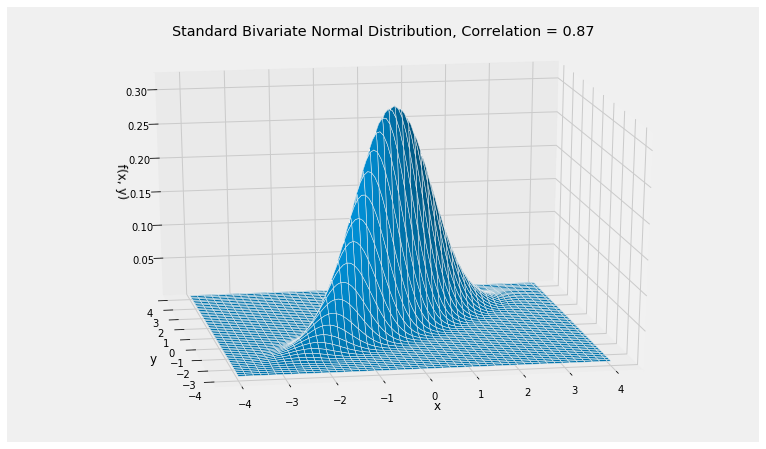

The bivariate normal distribution is the joint distribution of the blue and red lengths X and Y when the original point (X,Z) has i.i.d. standard normal coordinates. This transforms the circular contours of the joint density surface of (X,Z) into the elliptical contours of the joint density surface of (X,Y).
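One way to see why the contours become elliptical: the contours of a bivariate normal density are ellipses whose principal axes point along the eigenvectors of the covariance matrix, with lengths proportional to the square roots of the eigenvalues. For the matrix with 1 on the diagonal and $\rho$ off the diagonal, the eigenvalues are $1 - \rho$ and $1 + \rho$, with eigenvectors along the lines $y = -x$ and $y = x$. The sketch below checks this with `np.linalg.eigh` for θ = 30 degrees, as in the graphs above. When $\rho = 0$ the two eigenvalues are equal and the contours are circles.

```python
import numpy as np

rho = np.cos(np.radians(30))       # theta = 30 degrees
cov = np.array([[1.0, rho],
                [rho, 1.0]])

# eigh returns eigenvalues in ascending order, with unit eigenvectors as columns
eigenvalues, eigenvectors = np.linalg.eigh(cov)
print(eigenvalues)      # 1 - rho and 1 + rho
print(eigenvectors)     # columns along the lines y = -x and y = x (up to sign)
```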

```python
cos(theta), (3**0.5)/2
```

(0.8660254037844387, 0.8660254037844386)

```python
rho = cos(theta)
Plot_bivariate_normal([0, 0], [[1, rho], [rho, 1]])
plt.title('Standard Bivariate Normal Distribution, Correlation = '+str(round(rho, 2)));
```

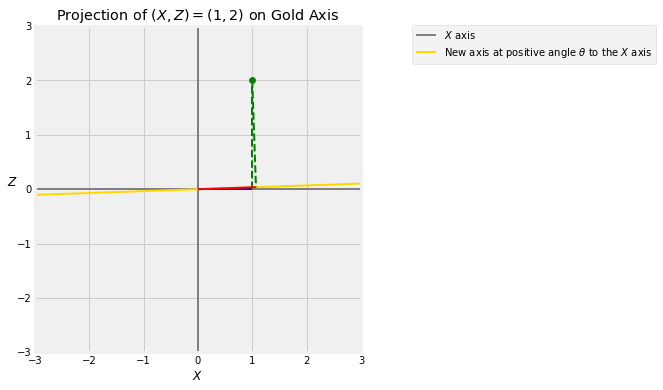

Small θ

As we observed earlier, when θ is very small there is hardly any change in the position of the axis. So X and Y are almost equal.

```python
theta = 2
projection_1_2(theta)
```

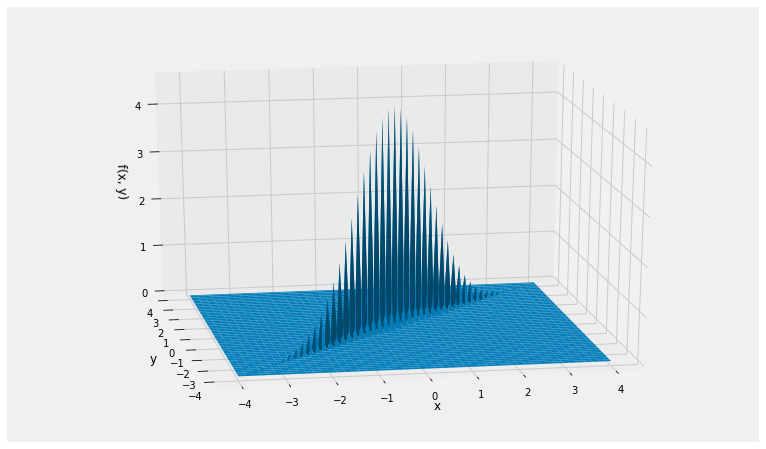

The bivariate normal density of X and Y, therefore, is essentially confined to the X=Y line. The correlation cos(θ) is large because θ is small; it is more than 0.999.

You can see the plotting function having trouble rendering this joint density surface.

```python
rho = cos(theta)
rho
```

0.99939082701909576

```python
Plot_bivariate_normal([0, 0], [[1, rho], [rho, 1]])
```
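A quick numerical look at the case θ = 2 degrees. NumPy's `np.cos` takes radians, so the sketch converts from degrees first. Since $\text{Var}(Y - X) = 2 - 2\rho$ for the standard bivariate normal, the simulated points should stay within a very thin band around the line $Y = X$. The sample size and seed are arbitrary choices.

```python
import numpy as np

theta = 2                                 # degrees
rho = np.cos(np.radians(theta))
print(rho)                                # about 0.99939, more than 0.999

rng = np.random.default_rng(4)
x = rng.standard_normal(1_000)
z = rng.standard_normal(1_000)
y = rho * x + np.sqrt(1 - rho**2) * z

# Y - X has SD sqrt(2 - 2*rho), about 0.035, so the points hug the line Y = X
print(np.sqrt(2 - 2 * rho), np.std(y - x))
```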

Orthogonality and Independence

When θ is 90 degrees, the gold axis is orthogonal to the X axis, and Y is equal to Z, which is independent of X.

```python
theta = 90
projection_1_2(theta)
```

When θ=90 degrees, cos(θ)=0. The joint density surface of (X,Y) is the same as that of (X,Z) and has circular symmetry.

If you think of $\rho X$ as a “signal” and $\sqrt{1-\rho^2}\,Z$ as “noise”, then $Y$ can be thought of as an observation whose value is “signal plus noise”. In the rest of the chapter we will see if we can separate the signal from the noise.
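Because $X$ and $Z$ are independent, the variance of $Y$ splits into a signal part and a noise part: $\text{Var}(\rho X) = \rho^2$ and $\text{Var}(\sqrt{1-\rho^2}\,Z) = 1 - \rho^2$, which add up to 1. So $\rho^2$ is the fraction of the variance of $Y$ that comes from the signal. The sketch below checks this decomposition on simulated data with $\rho = 0.6$, an illustrative choice.

```python
import numpy as np

rho = 0.6
rng = np.random.default_rng(5)
x = rng.standard_normal(100_000)
z = rng.standard_normal(100_000)

signal = rho * x                      # variance rho**2 = 0.36
noise = np.sqrt(1 - rho**2) * z       # variance 1 - rho**2 = 0.64
y = signal + noise                    # variance 0.36 + 0.64 = 1

print(np.var(signal), np.var(noise), np.var(y))
```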

Representations of the Bivariate Normal

When we are working with just two variables X and Y, matrix representations are often unnecessary. We will use the following three representations interchangeably.

- $X_1$ and $X_2$ are bivariate normal with parameters $(\mu_1, \mu_2, \sigma_1^2, \sigma_2^2, \rho)$

- The standardized variables $X_1^*$ and $X_2^*$ are standard bivariate normal with correlation $\rho$. Then $X_2^* = \rho X_1^* + \sqrt{1-\rho^2}\,Z$ for some standard normal $Z$ that is independent of $X_1^*$. This follows from Definition 2 of the multivariate normal.

- $X_1$ and $X_2$ have the multivariate normal distribution with mean vector $[\mu_1 ~ \mu_2]^T$ and covariance matrix