Chi-Squared Distributions

Let $Z$ be a standard normal random variable and let $V = Z^2$. By the change of variable formula for densities, we found the density of $V$ to be

$$
f_V(v) = \frac{1}{\sqrt{2\pi}} v^{-\frac{1}{2}} e^{-\frac{1}{2}v}, \qquad v > 0
$$

That’s the gamma $(1/2, 1/2)$ density. It is also called the chi-squared density with 1 degree of freedom, which we will abbreviate to chi-squared (1).
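As a rough numerical check of this density, the sketch below simulates $Z^2$ and compares the proportion of simulated values in the interval $(1, 2]$ with the integral of the density over the same interval. It uses only Python's standard library. The function names, the seed, the sample size, and the choice of interval are illustrative assumptions, not part of the derivation.

```python
import math
import random


def chi_squared_1_density(v):
    """The density displayed above: v^(-1/2) e^(-v/2) / sqrt(2 pi), for v > 0."""
    return v ** (-0.5) * math.exp(-0.5 * v) / math.sqrt(2 * math.pi)


def midpoint_integral(f, a, b, num_bins=1000):
    """Approximate the integral of f over [a, b] by the midpoint rule."""
    width = (b - a) / num_bins
    return width * sum(f(a + (k + 0.5) * width) for k in range(num_bins))


rng = random.Random(0)  # fixed seed so the run is reproducible
num_samples = 100_000
squares = [rng.gauss(0, 1) ** 2 for _ in range(num_samples)]

# Proportion of simulated values of V = Z^2 that fall in (1, 2]
empirical = sum(1 < v <= 2 for v in squares) / num_samples
# Chance of the same interval according to the density
from_density = midpoint_integral(chi_squared_1_density, 1, 2)

print(f"simulated P(1 < V <= 2):    {empirical:.4f}")
print(f"from the density, numeric:  {from_density:.4f}")
```

The two numbers should agree to about two decimal places; the remaining gap is simulation noise.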

From Chi-Squared (1) to Chi-Squared (n)

When we were establishing the properties of the standard normal density, we discovered that if $Z_1$ and $Z_2$ are independent standard normal then $Z_1^2 + Z_2^2$ has the exponential $(1/2)$ distribution. We saw this by comparing two different settings in which the Rayleigh distribution arises. But that wasn’t a particularly illuminating reason for why $Z_1^2 + Z_2^2$ should be exponential.

But now we know that the sum of independent gamma variables with the same rate is also gamma; the shape parameters add and the rate remains the same. Each of $Z_1^2$ and $Z_2^2$ is gamma $(1/2, 1/2)$, so the shape of the sum is $1/2 + 1/2 = 1$. Therefore $Z_1^2 + Z_2^2$ is a gamma $(1, 1/2)$ variable. That’s the same distribution as exponential $(1/2)$, as you showed in exercises. This explains why the sum of squares of two i.i.d. standard normal variables has the exponential $(1/2)$ distribution.
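A simulation can make this concrete. The sketch below, which assumes an arbitrary seed, sample size, and cutoff $t = 2$, compares the empirical tail chance of the sum of two squared standard normals with the exponential $(1/2)$ tail $e^{-t/2}$, and the empirical mean with the exponential mean $1/(1/2) = 2$.

```python
import math
import random
import statistics

rng = random.Random(1)  # fixed seed so the run is reproducible
num_samples = 100_000

# Each entry is one simulated value of Z_1^2 + Z_2^2
sums = [rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(num_samples)]

t = 2.0  # illustrative cutoff
empirical_tail = sum(s > t for s in sums) / num_samples
exponential_tail = math.exp(-0.5 * t)  # P(T > t) for T exponential (1/2)
empirical_mean = statistics.fmean(sums)

print(f"simulated P(sum > {t}): {empirical_tail:.4f}")
print(f"exponential (1/2) tail: {exponential_tail:.4f}")
print(f"simulated mean:         {empirical_mean:.3f} (exponential mean is 2)")
```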

Now let $Z_1, Z_2, \ldots, Z_n$ be i.i.d. standard normal variables. Then $Z_1^2, Z_2^2, \ldots, Z_n^2$ are i.i.d. chi-squared (1) variables. That is, each of them has the gamma $(1/2, 1/2)$ distribution.

By induction, $Z_1^2 + Z_2^2 + \cdots + Z_n^2$ has the gamma $(n/2, 1/2)$ distribution. This is called the chi-squared distribution with $n$ degrees of freedom, which we will abbreviate to chi-squared $(n)$.

Chi-Squared with n Degrees of Freedom

For a positive integer n, the random variable X has the chi-squared distribution with n degrees of freedom if the distribution of X is gamma (n/2,1/2). That is, X has density

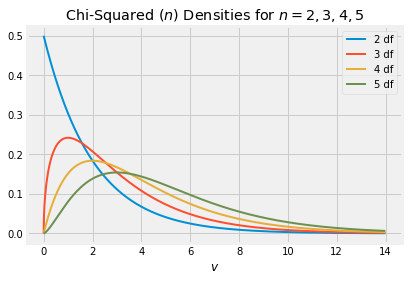

fX(x) = 12n2Γ(n2)xn2−1e−12x, x>0Here are the graphs of the chi-squared densities for degrees of freedom 2 through 5.

The chi-squared (2) distribution is exponential because it is the gamma (1,1/2) distribution. This distribution has three names:

- chi-squared (2)

- gamma (1, 1/2)

- exponential (1/2)
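To see that the three names describe one density, the sketch below evaluates each formula at a few points. The helper names and the evaluation points are illustrative choices; the gamma density is written in the shape and rate parametrization used in this section.

```python
import math


def gamma_density(x, r, lam):
    """Gamma (r, lam) density at x > 0, with shape r and rate lam."""
    return lam ** r / math.gamma(r) * x ** (r - 1) * math.exp(-lam * x)


def chi_squared_density(x, n):
    """Chi-squared (n) density, defined as the gamma (n/2, 1/2) density."""
    return gamma_density(x, n / 2, 1 / 2)


def exponential_density(x, lam):
    """Exponential (lam) density at x > 0."""
    return lam * math.exp(-lam * x)


for x in [0.5, 1.0, 2.0, 5.0]:
    print(
        x,
        chi_squared_density(x, 2),
        gamma_density(x, 1, 1 / 2),
        exponential_density(x, 1 / 2),
    )
```

Each row should show the same value three times.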

Mean and Variance

You know that if $T$ has the gamma $(r, \lambda)$ density then

$$
E(T) = \frac{r}{\lambda} \qquad SD(T) = \frac{\sqrt{r}}{\lambda}
$$

If $X$ has the chi-squared $(n)$ distribution then $X$ is gamma $(n/2, 1/2)$. So

$$
E(X) = \frac{n/2}{1/2} = n
$$

Thus the expectation of a chi-squared random variable is its degrees of freedom.

The SD is

$$
SD(X) = \frac{\sqrt{n/2}}{1/2} = \sqrt{2n}
$$
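These two formulas are easy to check by simulation. The sketch below builds chi-squared $(n)$ values as sums of $n$ squared standard normals and compares their empirical mean and SD with $n$ and $\sqrt{2n}$. The function name, the choice $n = 5$, the seed, and the sample size are assumptions made for illustration.

```python
import math
import random
import statistics


def simulate_chi_squared(n, num_samples, seed=2):
    """Simulate chi-squared (n) values as sums of n squared standard normals."""
    rng = random.Random(seed)
    return [
        sum(rng.gauss(0, 1) ** 2 for _ in range(n))
        for _ in range(num_samples)
    ]


n = 5
values = simulate_chi_squared(n, 50_000)
empirical_mean = statistics.fmean(values)
empirical_sd = statistics.pstdev(values)

print(f"mean: {empirical_mean:.3f}  vs  n = {n}")
print(f"SD:   {empirical_sd:.3f}  vs  sqrt(2n) = {math.sqrt(2 * n):.3f}")
```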

Estimating the Normal Variance

Suppose $X_1, X_2, \ldots, X_n$ are i.i.d. normal $(\mu, \sigma^2)$ variables, and that you are in a setting in which you know $\mu$ and are trying to estimate $\sigma^2$.

Let $Z_i$ be $X_i$ in standard units, so that $Z_i = (X_i - \mu)/\sigma$. Define the random variable $T$ as follows:

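$$
T = \sum_{i=1}^n Z_i^2 = \frac{1}{\sigma^2} \sum_{i=1}^n (X_i - \mu)^2
$$

Then $T$ has the chi-squared $(n)$ distribution and $E(T) = n$. Now define $W$ by

$$
W = \frac{\sigma^2}{n} T = \frac{1}{n} \sum_{i=1}^n (X_i - \mu)^2
$$

Then $W$ can be computed based on the sample since $\mu$ is known. And since $W$ is a linear transformation of $T$, it is easy to see that $E(W) = \frac{\sigma^2}{n} E(T) = \sigma^2$.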

So we have constructed an unbiased estimate of $\sigma^2$. It is the mean squared deviation from the known population mean.
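Unbiasedness means that the estimate is right on average over many samples, not in any single sample. The sketch below illustrates this by drawing many normal samples, computing $W$ for each one, and averaging. The parameter values, the seed, the number of repetitions, and the function name are all hypothetical.

```python
import random
import statistics

MU, SIGMA, N = 10.0, 3.0, 8  # hypothetical known mean, true SD, and sample size


def known_mean_estimate(sample, mu):
    """W: the mean squared deviation from the known population mean mu."""
    return sum((x - mu) ** 2 for x in sample) / len(sample)


rng = random.Random(3)  # fixed seed so the run is reproducible
estimates = [
    known_mean_estimate([rng.gauss(MU, SIGMA) for _ in range(N)], MU)
    for _ in range(20_000)
]
average_w = statistics.fmean(estimates)

print(f"average of W over repetitions: {average_w:.3f}")
print(f"true variance sigma^2:         {SIGMA ** 2:.3f}")
```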

But typically, $\mu$ is not known. In that case you need a different estimate of $\sigma^2$ since you can’t compute $W$ as defined above. You showed in exercises that

$$
S^2 = \frac{1}{n-1} \sum_{i=1}^n (X_i - \bar{X})^2
$$

is an unbiased estimate of $\sigma^2$ regardless of the distribution of the $X_i$’s. When the $X_i$’s are normal, as is the case here, it turns out that $S^2$ is a linear transformation of a chi-squared $(n-1)$ random variable. The methods of the next chapter can be used to understand why.
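A simulation cannot prove the chi-squared claim, but it can show that the numbers are consistent with it. The sketch below takes the linear transformation to be $(n-1)S^2/\sigma^2$, which is the standard scaling, and checks that its empirical mean and variance are close to $n-1$ and $2(n-1)$, the mean and variance of a chi-squared $(n-1)$ variable. The parameter values, the seed, and the helper name are hypothetical.

```python
import random
import statistics

MU, SIGMA, N = 10.0, 3.0, 8  # hypothetical parameters; MU is not used in S^2


def sample_variance(sample):
    """S^2: squared deviations from the sample mean, divided by n - 1."""
    x_bar = statistics.fmean(sample)
    return sum((x - x_bar) ** 2 for x in sample) / (len(sample) - 1)


rng = random.Random(5)  # fixed seed so the run is reproducible
s2_values = [
    sample_variance([rng.gauss(MU, SIGMA) for _ in range(N)])
    for _ in range(20_000)
]

# Assumed scaling: (n - 1) S^2 / sigma^2 should behave like chi-squared (n - 1)
scaled = [(N - 1) * s2 / SIGMA ** 2 for s2 in s2_values]
average_s2 = statistics.fmean(s2_values)
scaled_mean = statistics.fmean(scaled)
scaled_variance = statistics.pvariance(scaled)

print(f"average of S^2:     {average_s2:.3f}  vs  sigma^2 = {SIGMA ** 2:.3f}")
print(f"mean of scaled S^2: {scaled_mean:.3f}  vs  n - 1 = {N - 1}")
print(f"variance of scaled: {scaled_variance:.3f}  vs  2(n - 1) = {2 * (N - 1)}")
```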

“Degrees of Freedom”

The example above helps explain the strange term “degrees of freedom” for the parameter of the chi-squared distribution.

- When $\mu$ is known, you have $n$ independent centered normals $(X_i - \mu)$ that you can use to estimate $\sigma^2$. That is, you have $n$ degrees of freedom in constructing your estimate.

- When $\mu$ is not known, you are using all $n$ of $X_1 - \bar{X}, X_2 - \bar{X}, \ldots, X_n - \bar{X}$ in your estimate, but they are not independent. They are the deviations of the list $X_1, X_2, \ldots, X_n$ from their average $\bar{X}$, and hence their sum is 0. If you know $n-1$ of them, the final one is determined. So you only have $n-1$ degrees of freedom.
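The constraint in the second case can be seen directly in a small example. The sketch below, using an arbitrary simulated sample of size 6, shows that the deviations from the sample average sum to 0 up to floating point error, so the last deviation can be recovered from the other $n-1$.

```python
import random
import statistics

rng = random.Random(4)  # fixed seed so the run is reproducible
xs = [rng.gauss(10, 3) for _ in range(6)]  # hypothetical sample, n = 6

x_bar = statistics.fmean(xs)
deviations = [x - x_bar for x in xs]

# The deviations from the sample average always sum to 0
total = sum(deviations)

# So the final deviation is minus the sum of the first n - 1
recovered_last = -sum(deviations[:-1])

print(f"sum of deviations:        {total:.2e}")
print(f"last deviation:           {deviations[-1]:.6f}")
print(f"recovered from the rest:  {recovered_last:.6f}")
```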