8.2. Applying the Definition

Now that we have a few ways to think about expectation, let’s see why it has such fundamental importance. We will start by directly applying the definition to calculate some expectations. In subsequent sections we will develop more powerful methods to calculate and use expectation.

8.2.1. Constant

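This little example is worth writing out because it gets used all the time. Suppose a random variable $X$ is actually a constant $c$, that is, suppose $P(X = c) = 1$. Then the distribution of $X$ puts all its mass on the single value $c$, and $E(X) = c \cdot 1 = c$. We just write $E(c) = c$.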

8.2.2. Bernoulli and Indicators

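If $X$ has the Bernoulli $(p)$ distribution, then $P(X = 1) = p$ and $P(X = 0) = 1 - p$. So

$$
E(X) = 0 \cdot (1-p) + 1 \cdot p = p
$$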

As you saw earlier, zero/one valued random variables are building blocks for other variables and are called indicators.

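Let $A$ be any event. The indicator of $A$ is the random variable $I_A$ that is 1 if $A$ occurs and 0 if $A$ doesn't occur. Thus $I_A$ has the Bernoulli $(P(A))$ distribution, and

$$
E(I_A) = P(A)
$$

by our calculation above. Thus every probability is an expectation. We will use this heavily in later sections.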

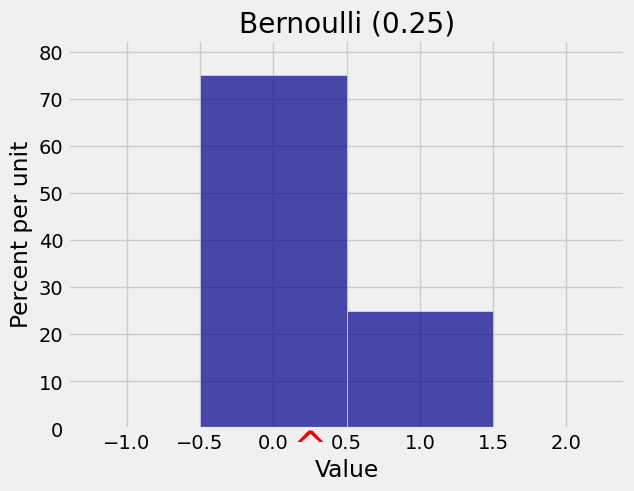

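```python
from datascience import *
from prob140 import *
import matplotlib.pyplot as plt

# Bernoulli (0.25): values 0 and 1 with chances 0.75 and 0.25
x = [0, 1]
qp = [0.75, 0.25]
bern_1_4 = Table().values(x).probabilities(qp)
Plot(bern_1_4, show_ev=True)   # show_ev=True marks the expectation
plt.title('Bernoulli (0.25)');
```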
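The two calculations in this subsection can be checked by applying the definition of expectation directly. The sketch below uses only the standard library; the helper name `expectation` and the choice of event (a fair die showing six) are assumptions made for illustration, not part of the text.

```python
from fractions import Fraction

def expectation(values, probs):
    """Apply the definition: sum of (value times probability)."""
    return sum(v * p for v, p in zip(values, probs))

# Bernoulli (1/4): values 0 and 1 with chances 3/4 and 1/4
p = Fraction(1, 4)
ev_bernoulli = expectation([0, 1], [1 - p, p])

# Indicator of the illustrative event A = "a fair die shows six", so P(A) = 1/6.
# The indicator is 1 on the outcome 6 and 0 on the other five outcomes.
faces = range(1, 7)
indicator_values = [1 if face == 6 else 0 for face in faces]
ev_indicator = expectation(indicator_values, [Fraction(1, 6)] * 6)

print(ev_bernoulli, ev_indicator)   # 1/4 1/6
```

Exact fractions are used so that the expectation of the indicator can be compared with $P(A)$ without rounding error.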

Quick Check

Three coins are tossed. Let

Answer

8.2.3. Uniform on an Interval of Integers

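Let $a$ and $b$ be two integers such that $a < b$. If $X$ has the uniform distribution on the integers $a, a+1, \ldots, b$, then by symmetry $E(X) = \frac{a+b}{2}$, the midpoint of the possible values.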

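For example, if $X$ has the uniform distribution on $1, 2, \ldots, n$, then $E(X) = \frac{n+1}{2}$.

An instance of this is if $X$ is the number of spots on one roll of a die. Then $E(X) = 3.5$.

If instead $X$ is uniform on $0, 1, \ldots, n$, then $E(X) = \frac{n}{2}$.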

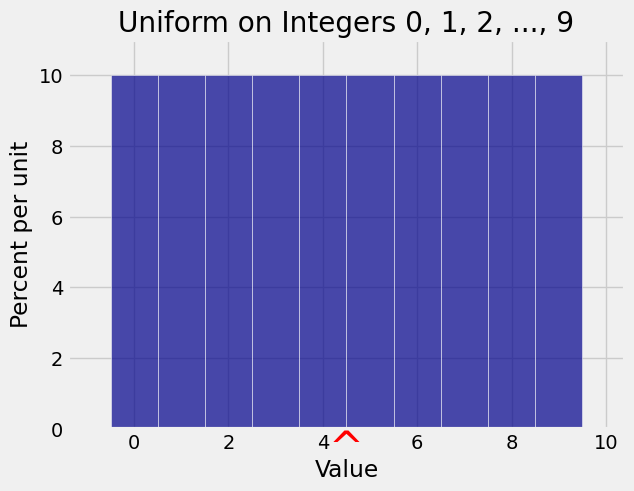

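```python
import numpy as np
import matplotlib.pyplot as plt
from datascience import *
from prob140 import *

# Uniform on 0, 1, ..., 9: each of the ten values has chance 0.1
x = np.arange(10)
probs = 0.1*np.ones(10)
unif_10 = Table().values(x).probabilities(probs)
Plot(unif_10, show_ev=True)
plt.title('Uniform on Integers 0, 1, 2, ..., 9');
```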
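As a check on the symmetry argument, the expectation of a uniform distribution on consecutive integers can be computed from the definition and compared with the midpoint of the endpoints. This is a minimal sketch; the function name `uniform_expectation` is a hypothetical helper introduced here.

```python
from fractions import Fraction

def uniform_expectation(a, b):
    """E(X) for X uniform on the integers a, a+1, ..., b, from the definition."""
    n = b - a + 1                       # number of equally likely values
    return sum(Fraction(x, n) for x in range(a, b + 1))

ev_0_to_9 = uniform_expectation(0, 9)   # uniform on 0, 1, ..., 9
ev_die = uniform_expectation(1, 6)      # one roll of a fair die

print(ev_0_to_9, ev_die)                # 9/2 7/2
```

In both cases the result is the midpoint of the smallest and largest possible values.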

Quick Check

Let

(i)

(ii)

(iii)

Answer

(ii)


8.2.4. Poisson

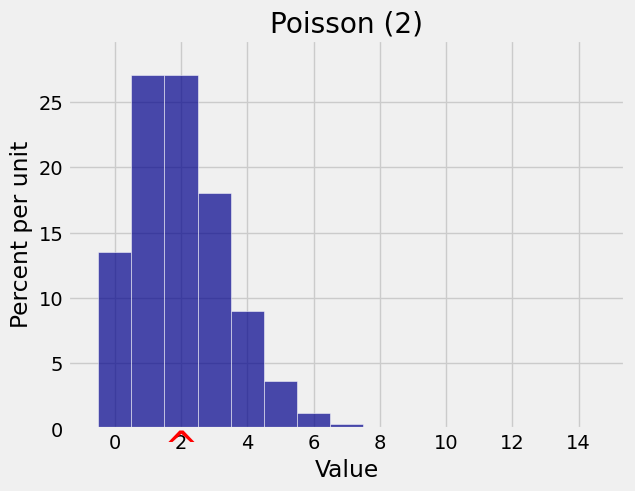

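Let $X$ have the Poisson $(\mu)$ distribution. Then

$$
E(X) = \sum_{k=0}^\infty k e^{-\mu} \frac{\mu^k}{k!}
= e^{-\mu} \mu \sum_{k=1}^\infty \frac{\mu^{k-1}}{(k-1)!}
= e^{-\mu} \mu e^{\mu} = \mu
$$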

We now have an important new interpretation of the parameter of the Poisson distribution. We saw earlier it was close to the mode; now we know that it is also the balance point or expectation of the distribution. The notation $\mu$ for the parameter is consistent with the common use of $\mu$ for a mean.

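```python
import numpy as np
import matplotlib.pyplot as plt
from scipy import stats
from datascience import *
from prob140 import *

k = np.arange(15)                       # values 0 through 14; the tail beyond is negligible
poi_2_probs = stats.poisson.pmf(k, 2)   # Poisson (2) probabilities
dist_poi_2 = Table().values(k).probabilities(poi_2_probs)
Plot(dist_poi_2, show_ev=True)
plt.title('Poisson (2)');
```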
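The claim that the Poisson parameter is the expectation can be checked numerically by summing value times probability. The sketch below uses only the standard library; `poisson_pmf` is a hypothetical helper, and the cutoffs (15 terms, matching the values plotted above, and 60 terms) are choices made for this illustration because the infinite sum has to be truncated somewhere.

```python
import math

def poisson_pmf(k, mu):
    """Poisson (mu) probability of the value k."""
    return math.exp(-mu) * mu**k / math.factorial(k)

mu = 2

# Sum k * P(X = k) over the values 0, 1, ..., 14
ev_15_terms = sum(k * poisson_pmf(k, mu) for k in range(15))

# Include many more terms; the omitted tail is far below floating point precision
ev_60_terms = sum(k * poisson_pmf(k, mu) for k in range(60))

print(ev_15_terms, ev_60_terms)
```

The first sum falls slightly short of the parameter because of the truncated tail, and the second agrees with it to within floating point error.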

Quick Check

Let

(a) If you simulate

(b) The most likely value of

(c)

Answer

(a) True

(b) True

(c) False


8.2.5. Tail Sum Formula

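To find the expectation of a non-negative integer valued random variable it is sometimes quicker to use a formula that uses only the right hand tail probabilities $P(X \ge k)$ for $k \ge 1$.

For non-negative integer valued $X$,

$$
E(X) = 0 \cdot P(X=0) + 1 \cdot P(X=1) + 2 \cdot P(X=2) + 3 \cdot P(X=3) + \cdots
$$

Rewrite this as

$$
\begin{array}{rlll}
E(X) ~ = & P(X=1) & & \\
+ & P(X=2) & + ~ P(X=2) & \\
+ & P(X=3) & + ~ P(X=3) & + ~ P(X=3) \\
+ & \cdots & &
\end{array}
$$

Add the terms along each column on the right hand side to get the tail sum formula for the expectation of a non-negative integer valued random variable.

$$
E(X) = P(X \ge 1) + P(X \ge 2) + P(X \ge 3) + \cdots
= \sum_{k=1}^\infty P(X \ge k) = \sum_{k=0}^\infty P(X > k)
$$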

This formula comes in handy if a random variable has tail probabilities that are easy to find and also easy to sum.
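The tail sum formula expresses $E(X)$ as the sum of the right hand tail probabilities $P(X \ge k)$ over $k = 1, 2, 3, \ldots$. The sketch below compares it with the definition on a small example; the choice of distribution (the number of heads in three tosses of a fair coin) and the helper name `right_tail` are assumptions made for illustration.

```python
from fractions import Fraction

# Number of heads in three tosses of a fair coin
values = [0, 1, 2, 3]
probs = [Fraction(1, 8), Fraction(3, 8), Fraction(3, 8), Fraction(1, 8)]

# Expectation by the definition: sum of value times probability
ev_definition = sum(v * p for v, p in zip(values, probs))

def right_tail(k):
    """P(X >= k) for the distribution above."""
    return sum(p for v, p in zip(values, probs) if v >= k)

# Expectation by the tail sum formula: P(X >= 1) + P(X >= 2) + P(X >= 3)
ev_tail_sum = sum(right_tail(k) for k in range(1, max(values) + 1))

print(ev_definition, ev_tail_sum)   # 3/2 3/2
```

The sum stops at the largest possible value because every tail probability beyond it is zero.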

8.2.6. Geometric

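In a sequence of i.i.d. Bernoulli $(p)$ trials, the number of trials till the first success is a random variable with possible values $1, 2, 3, \ldots$.

Let $X$ be the number of trials till the first success, and let $q = 1 - p$. The first success is on trial $k$ if the first $k-1$ trials are failures and trial $k$ is a success, so

$$
P(X = k) = q^{k-1} p, ~~~ k \ge 1
$$

This is called the geometric $(p)$ distribution on $1, 2, 3, \ldots$.

The right tails of $X$ are simple because for each $k \ge 1$,

$$
P(X > k) = P(\text{first } k \text{ trials are failures}) = q^k
$$

The formula is also true for $k = 0$ because $P(X > 0) = 1 = q^0$.

By the tail sum formula,

$$
E(X) = \sum_{k=0}^\infty P(X > k) = \sum_{k=0}^\infty q^k = \frac{1}{1-q} = \frac{1}{p}
$$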
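A numerical check of the geometric expectation, assuming $X$ is the number of i.i.d. Bernoulli $(p)$ trials till the first success, so that $P(X > k) = (1-p)^k$. The sketch adds up a finite number of tail probabilities and compares the total with $1/p$; the function name `geometric_tail_sum` and the cutoff of 500 terms are hypothetical choices for this illustration.

```python
def geometric_tail_sum(p, n_terms=500):
    """Partial tail sum P(X > 0) + P(X > 1) + ... + P(X > n_terms - 1)."""
    q = 1 - p                           # chance of failure on a single trial
    return sum(q**k for k in range(n_terms))

# Chances of success: a six on a die, more than four spots on a die, heads on a coin
for p in [1/6, 1/3, 1/2]:
    print(p, geometric_tail_sum(p), 1/p)
```

With 500 terms the omitted tail is negligible for these values of $p$, so each partial sum agrees with $1/p$ up to floating point error.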


Quick Check

Find the expectations of the following random variables.

(a) The number of rolls of a die till the face with six spots appears

(b) The number of rolls of a die till a face with more than four spots appears

(c) The number of times a coin is tossed till it lands heads

Answer

(a) 6

(b) 3

(c) 2