14.5. The Sample Mean#

What’s central about the Central Limit Theorem? One answer is that it allows us to make inferences based on random samples when we don’t know much about the distribution of the population. For data scientists, that’s very valuable.

In Data 8 you saw that if we want estimate the mean of a population, we can construct confidence intervals for the parameter based on the mean of a large random sample. In that course you used the bootstrap to generate an empirical distribution of the sample mean, and then used the empirical distribution to create the confidence interval. You will recall that those empirical distributions were invariably bell shaped.

In this section we will study the probability distribution of the sample mean and show that you can use it to construct confidence intervals for the population mean without any resampling.

Let’s start with the sample sum, which we now understand well. Recall our assumptions and notation:

Let

These results imply that as the sample size increases, the distribution of the sample sum moves to the right and becomes more spread out.

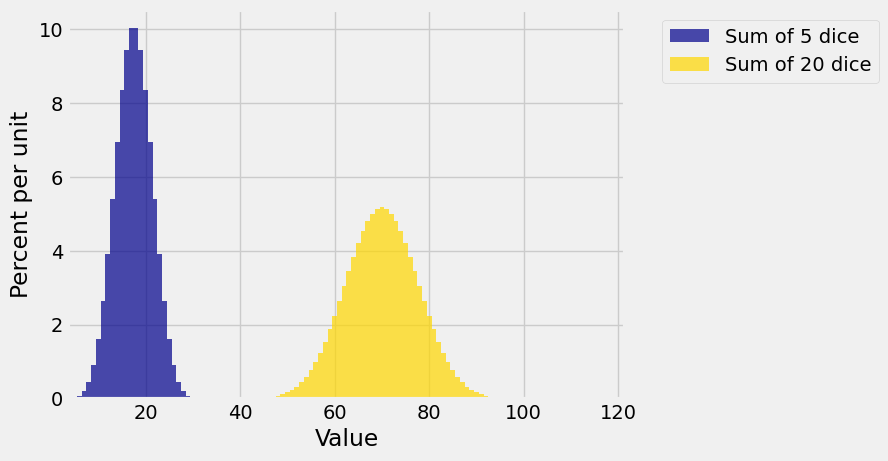

You can see this in the graph below. The graph shows the distributions of the sum of 5 rolls and the sum of 20 rolls of a die. The distributions are exact, calculated using the function dist_sum defined using pgf methods earlier in this chapter.

die = np.append(0, (1/6)*np.ones(6))

dist_sum_5 = dist_sum(5, die)

dist_sum_20 = dist_sum(20, die)

Plots('Sum of 5 dice', dist_sum_5, 'Sum of 20 dice', dist_sum_20)

You can see the normal distribution appearing already for the sum of 5 and 20 dice.

The gold histogram is more spread out than the blue. Because both are probability histograms, both have 1 as their total area. So the gold histogram has to be lower than the blue.

Now let’s look at the spread more carefully.

You can see also that the gold distribution isn’t four times as spread out as the blue, though the sample size in the gold distribution is four times that of the blue. The gold distribution is twice as spread out (and therefore half as tall) as the blue. That is because the SD of the sum is proportional to

The average of the sample behaves differently.

See More

14.5.1. The Mean of an IID Sample#

Let

Then

The expectation of the sample mean is always the underlying population mean

The SD of the sample mean is

The variability of the sample mean decreases as the sample size increases. So, as the sample size increases, the sample mean becomes a more accurate estimator of the population mean.

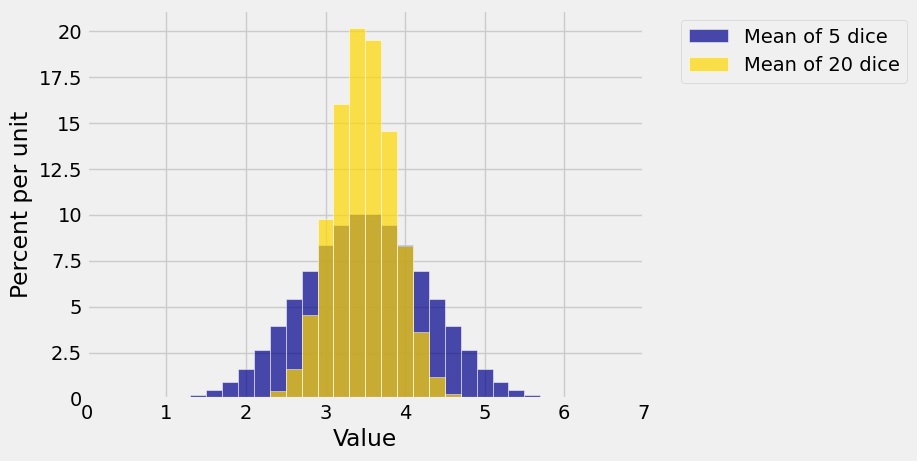

The graph below shows the distributions of the means of 5 rolls of a die and of 20 rolls. Both are centered at 3.5 but the distribution of the mean of the larger sample is narrower. You saw this frequently in Data 8: as the sample size increases, the distribution of the sample mean gets more concentrated around the population mean.

14.5.2. Square Root Law#

Accuracy doesn’t come cheap. The SD of the i.i.d. sample mean decreases according to the square root of the sample size. Therefore if you want to decrease the SD of the sample mean by a factor of 3, you have to increase the sample size by a factor of

The general result is usually stated in the reverse.

Square Root Law: If you multiply the sample size by a factor, then the SD of the i.i.d. sample mean decreases by the square root of the factor.

See More

14.5.3. Weak Law of Large Numbers#

The sample mean is an unbiased estimator of the population mean and has a small SD when the sample size is large. So the mean of a large sample is close to the population mean with high probability.

The formal result is called the Weak Law of Large Numbers.

Let

That is, for large

To prove the law, we will show that

In the terminology of probability theory, the result above is the same as saying that the i.i.d. sample mean converges in probability to the population mean. In the terminology of statistical inference, the result says that the i.i.d. sample mean is a consistent estimator of the population mean.

Quick Check

Answer

5 by the Weak Law

14.5.5. The Shape of the Distribution#

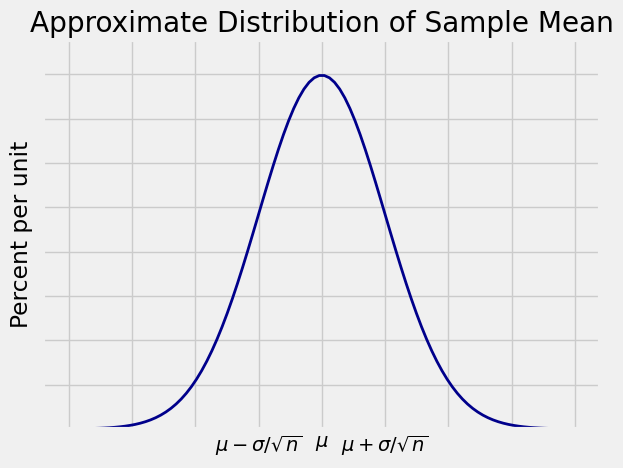

The Central Limit Theorem tells us that for large samples, the distribution of the sample mean is roughly normal. The sample mean is a linear transformation of the the sample sum. So if the distribution of the sample sum is roughly normal, the distribution of the sample mean is roughly normal as well, though with different parameters. Specifically, for large

The expectation stays the same no matter what