17.2. Independence

Informally, the definition of independence is the same as before: two random variables that have a joint density are independent if additional information about one of them doesn’t change the distribution of the other.

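One quick way to spot the lack of independence is to look at the set of possible values of the pair $(X, Y)$. If that set is not a rectangle, then $X$ and $Y$ cannot be independent: knowing the value of one of them changes the set of possible values of the other.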

Quick Check

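The “unit disc” is the disc of radius 1 centered at the origin. That is, it’s the set of points $(x, y)$ such that $x^2 + y^2 \le 1$. Suppose $(X, Y)$ has a joint density that is positive on the unit disc and 0 elsewhere. Pick the correct option.

(i) It is not possible to determine whether or not $X$ and $Y$ are independent.

(ii) $X$ and $Y$ are independent.

(iii) $X$ and $Y$ are not independent.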

Answer

(iii)
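Here is a concrete version of the argument. This minimal sketch assumes, purely for illustration, that $(X, Y)$ is uniform on the unit disc (any density that is positive on the disc would make the same point). It draws points by rejection sampling from the square $[-1, 1]^2$; the helper `sample_unit_disc`, the seed, and the cutoff 0.9 are hypothetical choices.

```python
import random

def sample_unit_disc(n, seed=0):
    """Draw n points uniformly from the unit disc by rejection from the square [-1, 1]^2."""
    rng = random.Random(seed)
    points = []
    while len(points) < n:
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        if x**2 + y**2 <= 1:   # keep only the points that land inside the disc
            points.append((x, y))
    return points

points = sample_unit_disc(100_000)
n = len(points)
p_x = sum(x > 0.9 for x, _ in points) / n                  # estimate of P(X > 0.9)
p_y = sum(y > 0.9 for _, y in points) / n                  # estimate of P(Y > 0.9)
p_both = sum(x > 0.9 and y > 0.9 for x, y in points) / n   # estimate of P(X > 0.9, Y > 0.9)

# Each event alone has positive probability, but together they are impossible:
# 0.9**2 + 0.9**2 = 1.62 > 1, so no point of the disc has both coordinates above 0.9.
print(p_x, p_y, p_both)
```

The joint probability is exactly 0 while the product of the two marginal probabilities is positive, so $P(X \in A, Y \in B) = P(X \in A)P(Y \in B)$ fails for these intervals.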


If the set of possible values is a rectangle, then you have to check independence using the old definition: jointly distributed random variables $X$ and $Y$ are independent if

$$
P(X \in A, Y \in B) = P(X \in A)P(Y \in B)
$$

for all intervals $A$ and $B$.
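The definition can be checked by simulation. This sketch assumes, as an illustration only, that $X$ is uniform on $(0, 1)$ and $Y$ is exponential with rate 1, generated independently, and uses the intervals $A = (0.2, 0.5)$ and $B = (1, 2)$; the seed and sample size are arbitrary.

```python
import math
import random

rng = random.Random(1)
n = 200_000
# Hypothetical example: X ~ Uniform(0, 1) and Y ~ Exponential(rate 1), generated independently
xs = [rng.random() for _ in range(n)]
ys = [rng.expovariate(1.0) for _ in range(n)]

in_A = [0.2 < x < 0.5 for x in xs]   # indicator of the event {X in A}
in_B = [1 < y < 2 for y in ys]       # indicator of the event {Y in B}

p_joint = sum(a and b for a, b in zip(in_A, in_B)) / n   # estimate of P(X in A, Y in B)
p_product = (sum(in_A) / n) * (sum(in_B) / n)            # estimate of P(X in A) P(Y in B)
exact = 0.3 * (math.exp(-1) - math.exp(-2))              # exact P(X in A) P(Y in B)

print(round(p_joint, 4), round(p_product, 4), round(exact, 4))
```

Both estimates should agree with the exact product up to simulation error.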

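Let $X$ have density $f_X$, let $Y$ have density $f_Y$, and suppose $X$ and $Y$ are independent. If $f$ is the joint density of $X$ and $Y$, then

$$
f(x, y)\,dx\,dy \approx P(X \in dx, Y \in dy) = P(X \in dx)P(Y \in dy) \approx f_X(x)\,dx\,f_Y(y)\,dy = f_X(x)f_Y(y)\,dx\,dy
$$

Thus if $X$ and $Y$ are independent, their joint density is

$$
f(x, y) = f_X(x)f_Y(y)
$$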

This is the product rule for densities: the joint density of two independent random variables is the product of their densities.
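To see the product rule at work numerically, here is a minimal sketch that assumes, for illustration only, that $X$ is exponential with rate 2 and $Y$ is exponential with rate 3. Integrating $f_X(x)f_Y(y)$ over a rectangle with a midpoint Riemann sum should reproduce the product of the two marginal probabilities. The helper `integrate_over_rectangle` and the rectangle $[0.5, 1] \times [0.1, 0.4]$ are hypothetical choices.

```python
import math

def f_X(x):
    return 2 * math.exp(-2 * x)   # exponential density with rate 2

def f_Y(y):
    return 3 * math.exp(-3 * y)   # exponential density with rate 3

def joint(x, y):
    return f_X(x) * f_Y(y)        # product rule for independent X and Y

def integrate_over_rectangle(f, a, b, c, d, n=400):
    """Midpoint-rule approximation of the integral of f over [a, b] x [c, d]."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx
        for j in range(n):
            y = c + (j + 0.5) * hy
            total += f(x, y)
    return total * hx * hy

numeric = integrate_over_rectangle(joint, 0.5, 1.0, 0.1, 0.4)
# P(0.5 < X < 1) * P(0.1 < Y < 0.4) from the exponential survival functions
exact = (math.exp(-1) - math.exp(-2)) * (math.exp(-0.3) - math.exp(-1.2))
print(numeric, exact)
```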

The converse is also true: if the joint density factors into a function of $x$ times a function of $y$ for all $(x, y)$, then $X$ and $Y$ are independent.
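As an illustration of the converse, take the hypothetical joint density $f(x, y) = 6e^{-2x - 3y}$ for $x, y > 0$, which factors as $e^{-2x}$ times $6e^{-3y}$. The sketch below finds the marginal densities numerically by integrating out the other variable, truncating the infinite range at 40 (an assumption that drops only a negligible tail), and checks that their product gives back the joint density.

```python
import math

def joint(x, y):
    # Hypothetical joint density on the positive quadrant; it factors as e^(-2x) * 6e^(-3y)
    return 6 * math.exp(-2 * x - 3 * y)

def integrate(g, lo, hi, n=20_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(g(lo + (k + 0.5) * h) for k in range(n))

def marginal_X(x):
    return integrate(lambda y: joint(x, y), 0, 40)   # integrate out y; exact value is 2e^(-2x)

def marginal_Y(y):
    return integrate(lambda x: joint(x, y), 0, 40)   # integrate out x; exact value is 3e^(-3y)

for x, y in [(0.3, 0.2), (1.0, 0.5), (2.0, 1.5)]:
    print(joint(x, y), marginal_X(x) * marginal_Y(y))
```

The two columns agree up to the small discretization error, as the product rule requires.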

17.2.1. Independent Standard Normal Random Variables

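Suppose $X$ and $Y$ are i.i.d. standard normal random variables. By the product rule, their joint density is

$$
f(x, y) = \frac{1}{\sqrt{2\pi}}e^{-\frac{1}{2}x^2} \cdot \frac{1}{\sqrt{2\pi}}e^{-\frac{1}{2}y^2}, ~~~~ -\infty < x, y < \infty
$$

Equivalently,

$$
f(x, y) = \frac{1}{2\pi}e^{-\frac{1}{2}(x^2 + y^2)}, ~~~~ -\infty < x, y < \infty
$$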

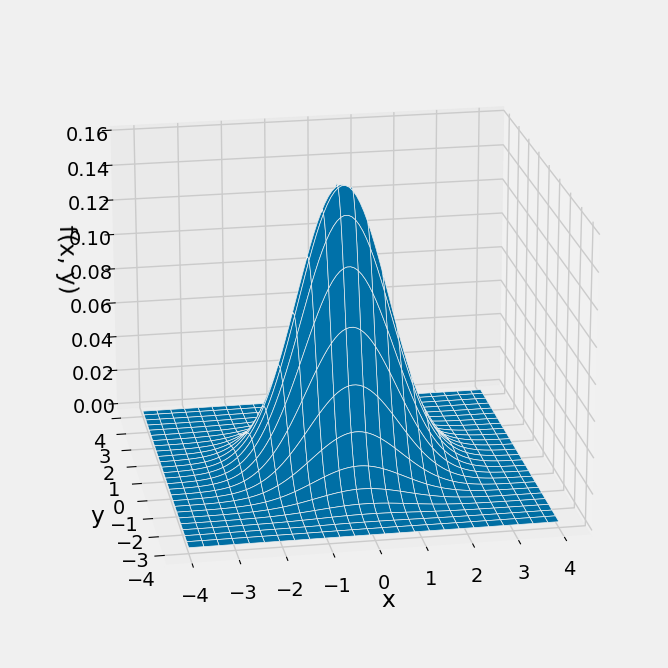
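A quick numerical check that the two forms of the joint density agree: this sketch evaluates the product of two standard normal densities and the combined formula at a few arbitrarily chosen points. The function names are hypothetical.

```python
import math

def std_normal_pdf(t):
    return math.exp(-t**2 / 2) / math.sqrt(2 * math.pi)

def joint_from_product(x, y):
    # Product rule: f(x, y) = phi(x) * phi(y)
    return std_normal_pdf(x) * std_normal_pdf(y)

def joint_closed_form(x, y):
    # Combined form: (1 / (2 pi)) * exp(-(x^2 + y^2) / 2)
    return math.exp(-(x**2 + y**2) / 2) / (2 * math.pi)

points = [(0, 0), (1, -2), (0.5, 1.5), (-3, 2.2)]
max_diff = max(abs(joint_from_product(x, y) - joint_closed_form(x, y)) for x, y in points)
print(max_diff)   # zero up to floating-point rounding
```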

Here is a graph of the joint density surface.

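```python
import math

import numpy as np
from prob140 import Plot_3d

def indep_standard_normals(x, y):
    # Joint density of two i.i.d. standard normals: the product of their densities
    return 1/(2*math.pi) * np.exp(-0.5*(x**2 + y**2))

Plot_3d((-4, 4), (-4, 4), indep_standard_normals, rstride=4, cstride=4)
```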

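Notice the circular symmetry of the surface. This is because the formula for the joint density involves the pair $(x, y)$ only through $x^2 + y^2$, the squared distance from $(x, y)$ to the origin. So the density is constant on every circle centered at the origin.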
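The symmetry can be confirmed directly. This sketch evaluates the joint density at several points on the circle of radius $r = 1.3$ (an arbitrary choice, as are the angles); the function name `standard_normal_joint` is hypothetical and uses the same formula as `indep_standard_normals`, written with `math` instead of NumPy.

```python
import math

def standard_normal_joint(x, y):
    # Same formula as indep_standard_normals, using math instead of NumPy
    return math.exp(-0.5 * (x**2 + y**2)) / (2 * math.pi)

r = 1.3
angles = [0, math.pi / 7, math.pi / 2, 2.0, 4.5]
values = [standard_normal_joint(r * math.cos(t), r * math.sin(t)) for t in angles]
print(values)   # all equal up to rounding: the density is constant on the circle of radius r
```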

Notice also that

Quick Check

Answer

Quick Check

For some positive constant

Answer

Yes

17.2.2. Competing Exponentials

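Let $X$ and $Y$ be independent random variables such that $X$ has the exponential $(\lambda)$ distribution and $Y$ has the exponential $(\mu)$ distribution. The goal of this example is to find $P(Y > X)$.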

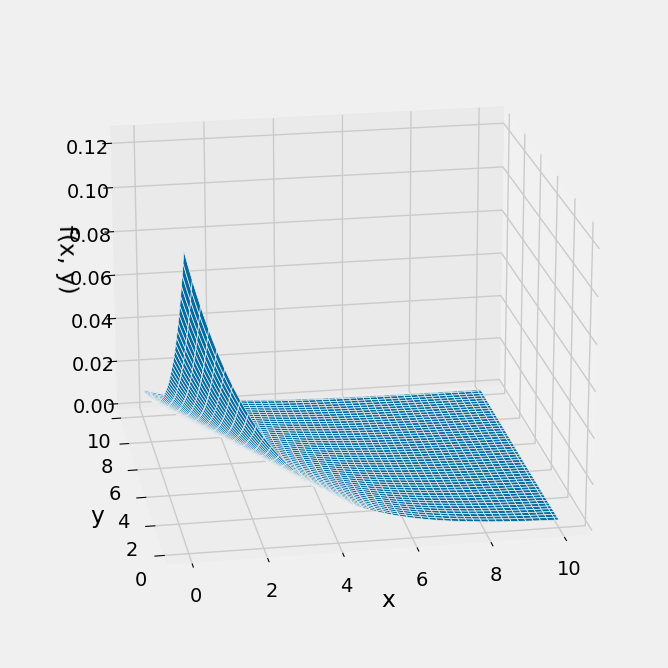

By the product rule, the joint density of $X$ and $Y$ is

$$
f(x, y) = \lambda e^{-\lambda x}\mu e^{-\mu y}, ~~~~ x > 0, ~ y > 0
$$

The graph below shows the joint density surface in the case $\lambda = 0.5$ and $\mu = 0.25$, so that $E(X) = 2$ and $E(Y) = 4$.

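```python
import numpy as np
from prob140 import Plot_3d

def independent_exp(x, y):
    # Joint density of independent exponential (0.5) and exponential (0.25) variables
    return 0.5 * 0.25 * np.exp(-0.5*x - 0.25*y)

Plot_3d((0, 10), (0, 10), independent_exp)
```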

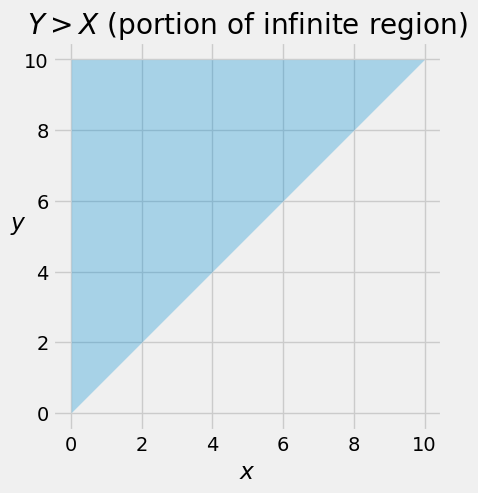

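To find $P(Y > X)$, we integrate the joint density over the region $\{(x, y): 0 < x < y\}$, the part of the first quadrant above the line $y = x$.

The probability is therefore

$$
P(Y > X) = \int_0^\infty \int_x^\infty \lambda e^{-\lambda x}\mu e^{-\mu y}\,dy\,dx
$$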
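Before evaluating the integral by hand, it can be reassuring to approximate it numerically. This sketch uses the parameters of the plot, $\lambda = 0.5$ and $\mu = 0.25$, applies an iterated midpoint rule with $x$ on the outside and $y$ on the inside, and truncates both infinite ranges at 80 (an assumption; the neglected probability is of order $e^{-20}$). The function `p_y_greater_x` is hypothetical.

```python
import math

lam, mu = 0.5, 0.25   # the parameters used in the plot of the joint density

def joint(x, y):
    # Product of the exponential (lam) and exponential (mu) densities
    return lam * math.exp(-lam * x) * mu * math.exp(-mu * y)

def p_y_greater_x(upper=80.0, n=400):
    """Iterated midpoint rule over the region 0 < x < y, with both ranges cut off at `upper`."""
    hx = upper / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * hx
        hy = (upper - x) / n   # inner integral over y in (x, upper)
        inner = hy * sum(joint(x, x + (j + 0.5) * hy) for j in range(n))
        total += hx * inner
    return total

approx = p_y_greater_x()
print(approx, lam / (lam + mu))   # numerical approximation vs lam / (lam + mu)
```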

We can do this double integral without much calculus, just by using probability facts. As you calculate, try to involve densities as much as possible, and remember that the integral of a density over an interval is the probability of that interval.

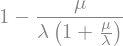

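Thus

$$
\begin{align*}
P(Y > X) &= \int_0^\infty \lambda e^{-\lambda x} \left( \int_x^\infty \mu e^{-\mu y}\,dy \right) dx \\
&= \int_0^\infty \lambda e^{-\lambda x} e^{-\mu x}\,dx \\
&= \frac{\lambda}{\lambda + \mu} \int_0^\infty (\lambda + \mu) e^{-(\lambda + \mu)x}\,dx \\
&= \frac{\lambda}{\lambda + \mu}
\end{align*}
$$

The inner integral is $P(Y > x) = e^{-\mu x}$, and the last integral is 1 because its integrand is the exponential $(\lambda + \mu)$ density.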

Analogously,

$$
P(X > Y) = \frac{\mu}{\lambda + \mu}
$$
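Both formulas can be checked by simulation. This minimal sketch uses Python's `random.expovariate`, which takes the rate (1 divided by the mean) as its argument, with the plot's parameters $\lambda = 0.5$ and $\mu = 0.25$; the function name `competing_exponentials`, the sample size, and the seed are hypothetical choices.

```python
import random

def competing_exponentials(lam, mu, n=100_000, seed=2):
    """Estimate P(Y > X) and P(X > Y) for independent X ~ exponential (lam), Y ~ exponential (mu)."""
    rng = random.Random(seed)
    y_longer = x_longer = 0
    for _ in range(n):
        x = rng.expovariate(lam)   # expovariate takes the rate, i.e. 1 / mean
        y = rng.expovariate(mu)
        y_longer += y > x
        x_longer += x > y
    return y_longer / n, x_longer / n

lam, mu = 0.5, 0.25
est_y_gt_x, est_x_gt_y = competing_exponentials(lam, mu)
print(est_y_gt_x, lam / (lam + mu))   # simulated vs exact P(Y > X)
print(est_x_gt_y, mu / (lam + mu))    # simulated vs exact P(X > Y)
```

With equal rates, for example `competing_exponentials(1.0, 1.0)`, both estimates should be close to $1/2$.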

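Notice that the two chances are proportional to the parameters. This is consistent with intuition if you think of $X$ and $Y$ as lifetimes. If $\lambda$ is large relative to $\mu$, then $X$ has the smaller expected value $1/\lambda$, so $X$ is likely to end first and $P(Y > X)$ is large.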

If $\lambda = \mu$, then $P(Y > X) = P(X > Y) = 1/2$.

Quick Check

The lifetimes of two electrical components are independent. The lifetime of Component 1 has the exponential

Without calculation, pick the correct option: The chance that Component 1 lives longer than Component 2 is

(i) greater than

(ii) equal to

(iii) less than

Answer

(i)

Quick Check

Find the chance in the Quick Check above.

Answer

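If we had attempted the double integral in the other order, first $x$ and then $y$, we would have had more work to do:

$$
P(Y > X) = \int_0^\infty \int_0^y \lambda e^{-\lambda x}\mu e^{-\mu y}\,dx\,dy
$$

Let’s take the easy way out and use SymPy to confirm that we get the same answer.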

# Create the symbols; they are all positive

x = Symbol('x', positive=True)

y = Symbol('y', positive=True)

lamda = Symbol('lamda', positive=True)

mu = Symbol('mu', positive=True)

# Construct the expression for the joint density

f_X = lamda * exp(-lamda * x)

f_Y = mu * exp(-mu * y)

joint_density = f_X * f_Y

joint_density

# Display the integral – first x, then y

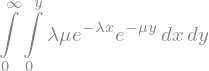

Integral(joint_density, (x, 0, y), (y, 0, oo))

# Evaluate the integral

answer = Integral(joint_density, (x, 0, y), (y, 0, oo)).doit()

answer

# Confirm that it is the same

# as what we got by integrating in the other order

simplify(answer)