17.3. Marginal and Conditional Densities


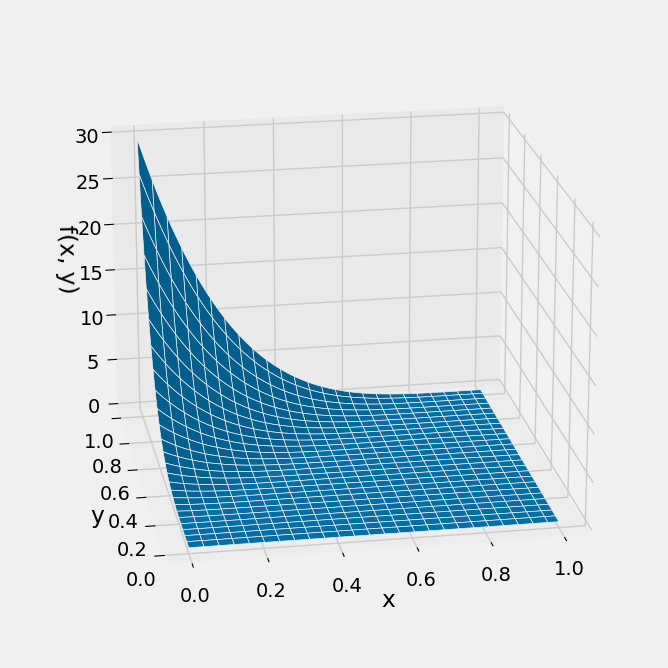

Let random variables \(X\) and \(Y\) have the joint density defined by

def jt_dens(x,y):
    # The density is positive only where y >= x
    if y < x:
        return 0
    else:
        return 30 * (y-x)**4

Plot_3d(x_limits=(0,1), y_limits=(0,1), f=jt_dens, cstride=4, rstride=4)

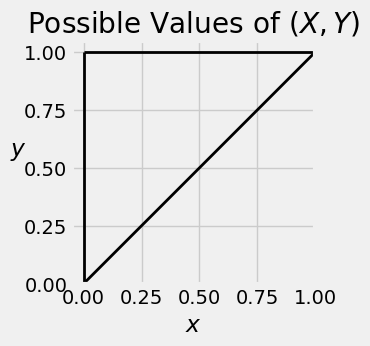

Then the possible values of \((X, Y)\) are in the upper left hand triangle of the unit square, that is, the region where \(y > x\).

Here is a quick check by SymPy to see that the function \(f\) is indeed a joint density.

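from sympy import Symbol, Integral

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30*(y-x)**4

# Inner integral over y from x to 1, outer integral over x from 0 to 1
Integral(joint_density, (y, x, 1), (x, 0, 1)).doit()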
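As a numerical cross-check of the symbolic result, the same double integral can be approximated with SciPy. This is only a sketch: it assumes SciPy is installed, and it redefines `jt_dens` locally so that the block runs on its own.

```python
from scipy.integrate import dblquad

def jt_dens(x, y):
    """Joint density from the text: 30(y-x)^4 where y >= x, zero elsewhere."""
    return 30 * (y - x)**4 if y >= x else 0.0

# dblquad calls func(inner, outer): the inner variable is y, the outer is x.
# x runs over (0, 1); for each x, y runs from x to 1.
total, abs_err = dblquad(lambda y, x: jt_dens(x, y), 0, 1, lambda x: x, lambda x: 1)
print(total, abs_err)
```

The first value printed should be very close to 1, agreeing with the SymPy calculation.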

17.3.1. Marginal Density of \(X\)

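We can use the joint density \(f\) to find the density of \(X\). Call this density \(f_X\). We know that

\[
f_X(x)\,dx ~\sim~ P(X \in dx) ~=~ \int_y P(X \in dx, Y \in dy) ~\sim~ \int_y f(x, y)\,dx\,dy ~=~ \Big( \int_y f(x, y)\,dy \Big)\,dx
\]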

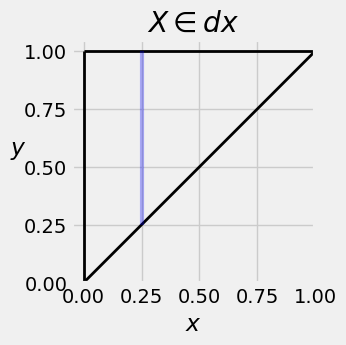

You can see the reasoning behind this calculation in the graph below. The blue strip shows the event \(\{ X \in dx \}\) for a value of \(x\) very near 0.25. To find the volume \(P(X \in dx)\), we hold \(x\) fixed and add over all \(y\).


So the density of \(X\) is given by

\[
f_X(x) = \int_y f(x, y)\,dy
\]

By analogy with the discrete case, \(f_X\) is sometimes called the marginal density of \(X\).

In our example, the possible values of \((X, Y)\) are the upper left hand triangle as shown above. So for each fixed \(x\), the possible values of \(Y\) go from \(x\) to 1.

Therefore for \(0 < x < 1\), the density of \(X\) is given by

\[
f_X(x) = \int_x^1 30(y-x)^4\,dy = 6(1-x)^5
\]

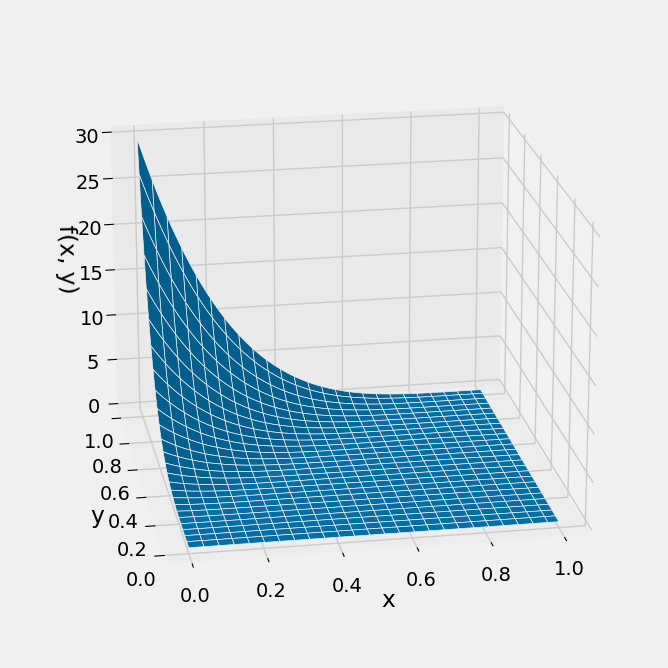

Here is the joint density surface again. You can see that \(X\) is much more likely to be near 0 than near 1.

Plot_3d(x_limits=(0,1), y_limits=(0,1), f=jt_dens, cstride=4, rstride=4)

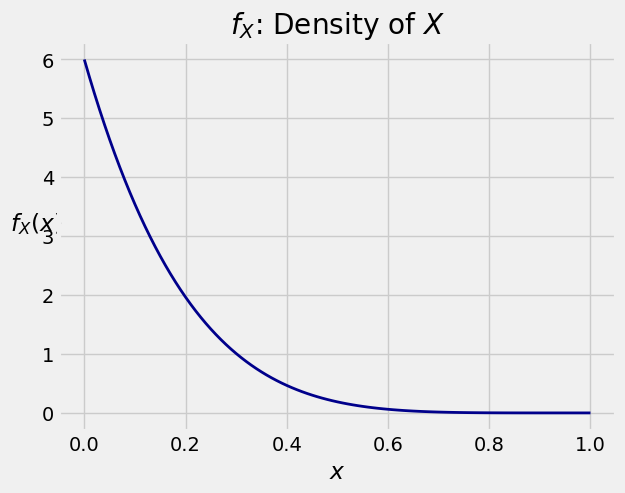

That can be seen in the shape of the density of \(X\).
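The marginal density of \(X\) in this example can also be worked out with SymPy by holding \(x\) fixed and integrating the joint density over \(y\) from \(x\) to 1. The sketch below is one way to do it; the names `marginal_X` and `total` are chosen here for illustration.

```python
from sympy import Symbol, integrate, factor

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30 * (y - x)**4

# Hold x fixed and integrate over the possible values of y, which run from x to 1
marginal_X = integrate(joint_density, (y, x, 1))
print(factor(marginal_X))

# A density must integrate to 1 over its range, here (0, 1)
total = integrate(marginal_X, (x, 0, 1))
print(total)
```

The factored form is a multiple of \((1-x)^5\), which is largest near \(x = 0\) and falls to 0 at \(x = 1\).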

17.3.2. Density of \(Y\)

Correspondingly, the density of \(Y\) can be found by fixing \(y\) and integrating over \(x\) as follows:

\[
f_Y(y) = \int_x f(x, y)\,dx
\]

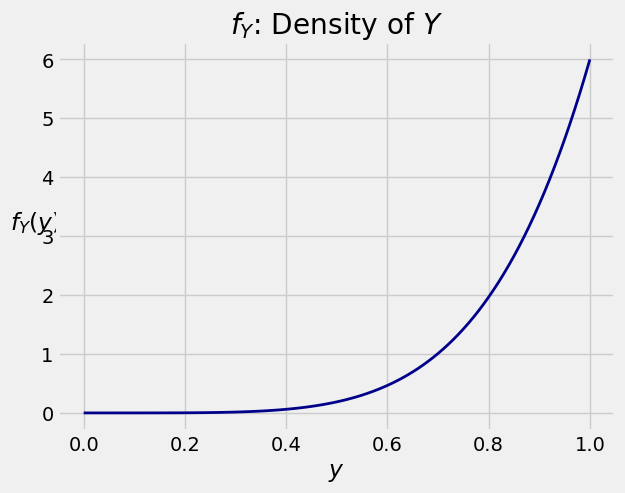

In our example, the joint density surface indicates that \(Y\) is more likely to be near 1 than near 0, which is confirmed by calculation. Remember that \(y > x\) and therefore for each fixed \(y\), the possible values of \(x\) are 0 through \(y\).

For \(0 < y < 1\),

\[
f_Y(y) = \int_0^y 30(y-x)^4\,dx = 6y^5
\]

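The same SymPy approach gives the marginal density of \(Y\): hold \(y\) fixed and integrate the joint density over \(x\) from 0 to \(y\). This is a minimal sketch, with `marginal_Y` and `total` as illustrative names.

```python
from sympy import Symbol, integrate

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30 * (y - x)**4

# Hold y fixed and integrate over the possible values of x, which run from 0 to y
marginal_Y = integrate(joint_density, (x, 0, y))
print(marginal_Y)

# Check that the result is a density on (0, 1)
total = integrate(marginal_Y, (y, 0, 1))
print(total)
```

The result grows like \(y^5\), so most of the probability sits near \(y = 1\).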

17.3.3. Conditional Densities

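Consider the conditional probability \(P(Y \in dy \mid X \in dx)\). By the division rule,

\[
P(Y \in dy \mid X \in dx) ~=~ \frac{P(X \in dx, Y \in dy)}{P(X \in dx)} ~\sim~ \frac{f(x, y)\,dx\,dy}{f_X(x)\,dx} ~=~ \frac{f(x, y)}{f_X(x)}\,dy
\]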

This gives us a division rule for densities. For a fixed value \(x\), the conditional density of \(Y\) given \(X=x\) is defined by

\[
f_{Y \mid X=x}(y) = \frac{f(x, y)}{f_X(x)}
\]

Since \(X\) has a density, we know that \(P(X = x) = 0\) for all \(x\). But the ratio above is of densities, not probabilities. It might help your intuition to think of “given \(X=x\)” to mean “given that \(X\) is just around \(x\)”.

Visually, the shape of this conditional density is the vertical cross section at \(x\) of the joint density graph above. The numerator determines the shape, and the denominator is part of the constant that makes the density integrate to 1.

Note that \(x\) is constant in this formula; it is the given value of \(X\). So the denominator \(f_X(x)\) is the same for all the possible values of \(y\).

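To see that the conditional density does integrate to 1, let’s do the integral.

\[
\int_y f_{Y \mid X=x}(y)\,dy ~=~ \int_y \frac{f(x, y)}{f_X(x)}\,dy ~=~ \frac{1}{f_X(x)} \int_y f(x, y)\,dy ~=~ \frac{f_X(x)}{f_X(x)} ~=~ 1
\]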

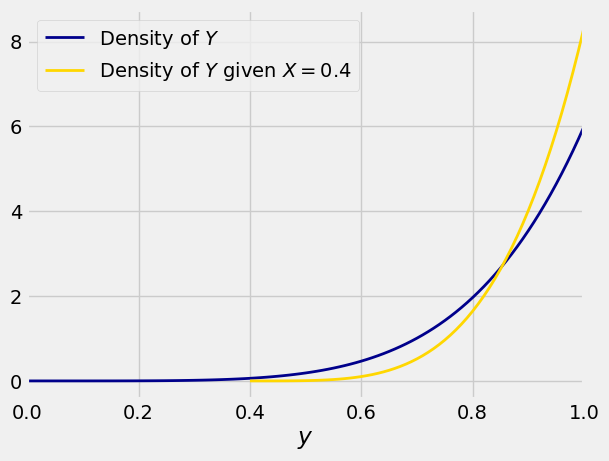
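For the running example, this can be checked symbolically for every \(x\) at once rather than one value at a time. The sketch below builds the conditional density as the joint density divided by the marginal of \(X\) and integrates it over \(y\) from \(x\) to 1; the variable names are illustrative.

```python
from sympy import Symbol, integrate, cancel

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30 * (y - x)**4

# Marginal density of X: integrate the joint density over y from x to 1
marginal_X = integrate(joint_density, (y, x, 1))

# Division rule for densities; x is treated as a fixed constant
cond_Y_given_x = joint_density / marginal_X

# Integrate over the possible values of y and cancel common factors
total = cancel(integrate(cond_Y_given_x, (y, x, 1)))
print(total)
```

Because \(x\) stays symbolic, the result holds for any \(x\) in \((0, 1)\).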

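In our example, let \(x = 0.4\) and consider finding the conditional density of \(Y\) given \(X = 0.4\). Under that condition, the possible values of \(Y\) are in the range 0.4 to 1, and therefore

\[
f_{Y \mid X=0.4}(y) = \frac{30(y-0.4)^4}{6(1-0.4)^5} = \frac{5}{0.6^5}(y-0.4)^4, ~~~ 0.4 < y < 1
\]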

This is a density on \((0.4, 1)\):

y = Symbol('y', positive=True)

conditional_density_Y_given_X_is_04 = (5/(0.6**5)) * (y - 0.4)**4

Integral(conditional_density_Y_given_X_is_04, (y, 0.4, 1)).doit()

The figure below shows the overlaid graphs of the density of \(Y\) and the conditional density of \(Y\) given \(X = 0.4\). You can see that the conditional density is more concentrated on large values of \(Y\), because under the condition \(X = 0.4\) you know that \(Y\) can’t be small.

17.3.4. Using a Conditional Density

We can use conditional densities to find probabilities and expectations, just as we would use an ordinary density. Here are some examples of calculations. In each case we will set up the integrals and then use SymPy.

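First, we find the conditional chance that \(Y\) exceeds 0.9 given \(X = 0.4\):

\[
P(Y > 0.9 \mid X = 0.4) = \int_{0.9}^1 \frac{5}{0.6^5}(y-0.4)^4\,dy
\]

The answer is about 60%.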

Integral(conditional_density_Y_given_X_is_04, (y, 0.9, 1)).doit()

Now we will use the conditional density to find a conditional expectation. Remember that in our example, given that \(X = 0.4\) the possible values of \(Y\) go from \(0.4\) to 1.

\[
E(Y \mid X = 0.4) = \int_{0.4}^1 y \cdot \frac{5}{0.6^5}(y-0.4)^4\,dy = 0.9
\]

Integral(y*conditional_density_Y_given_X_is_04, (y, 0.4, 1)).doit()
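The same calculation can be done with \(x\) left symbolic, which gives the conditional expectation of \(Y\) as a function of the given value of \(X\). This is a sketch under the example's joint density; `cond_expectation` and `value_at_04` are names introduced here, and exact rationals are used in place of the float 0.4.

```python
from sympy import Symbol, Rational, integrate, cancel

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30 * (y - x)**4
marginal_X = integrate(joint_density, (y, x, 1))
cond_Y_given_x = joint_density / marginal_X

# E(Y | X = x): integrate y times the conditional density over y from x to 1
cond_expectation = cancel(integrate(y * cond_Y_given_x, (y, x, 1)))
print(cond_expectation)

# Plug in x = 2/5 to compare with the calculation at X = 0.4
value_at_04 = cond_expectation.subs(x, Rational(2, 5))
print(value_at_04)
```

The expression is linear in \(x\), so a larger given value of \(X\) pushes the expected value of \(Y\) up toward 1.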

You can condition \(X\) on \(Y\) in the same way. By analogous arguments, for any fixed value of \(y\) the conditional density of \(X\) given \(Y = y\) is

\[
f_{X \mid Y=y}(x) = \frac{f(x, y)}{f_Y(y)}
\]
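For the example in this section, conditioning \(X\) on \(Y\) can be sketched in SymPy as follows. Given \(Y = y\), the possible values of \(X\) run from 0 to \(y\). The names `cond_X_given_y`, `total`, and `cond_expectation_X` are illustrative.

```python
from sympy import Symbol, integrate, cancel

x = Symbol('x', positive=True)
y = Symbol('y', positive=True)

joint_density = 30 * (y - x)**4

# Marginal density of Y: integrate the joint density over x from 0 to y
marginal_Y = integrate(joint_density, (x, 0, y))

# Division rule for densities; y is treated as a fixed constant
cond_X_given_y = joint_density / marginal_Y

# The conditional density should integrate to 1 over (0, y)
total = cancel(integrate(cond_X_given_y, (x, 0, y)))
print(total)

# Conditional expectation of X given Y = y
cond_expectation_X = cancel(integrate(x * cond_X_given_y, (x, 0, y)))
print(cond_expectation_X)
```

The conditional expectation comes out as a small fraction of \(y\), consistent with \(X\) tending to be near 0.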

All the examples in this section and the previous one have started with a joint density function that apparently emerged out of nowhere. In the next section, we will study a context in which they arise.