20.1. Maximum Likelihood

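Suppose you have an i.i.d. sample $X_1, X_2, \ldots, X_n$, where the distribution of each $X_i$ depends on a parameter $\theta$.

Assume that $\theta$ is fixed but unknown. To estimate it, ask the following question.

Among all the possible values of the parameter $\theta$, which one makes the observed sample the most likely?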

That maximizing value of the parameter is called the maximum likelihood estimate or MLE for short. In this section we will develop a method for finding MLEs.

Let’s look at an example to illustrate the main idea. Suppose you toss a coin that lands heads with a fixed but unknown probability

Now suppose I propose two estimates of

Between these two, you would pick

Your choice is based on the likelihood of the data under each of the two proposed values of

Of course,

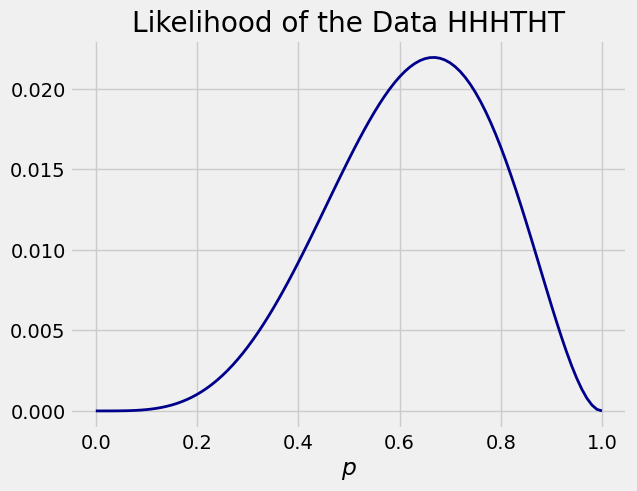

Here is a graph of this function of

You can see that the value of
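To make the idea concrete, here is a minimal sketch. It evaluates the likelihood of a sequence of tosses, first at two proposed values of $p$ and then over a fine grid of values in $[0, 1]$. The sequence `tosses` is hypothetical, not the data behind the graph above; 1 stands for heads and 0 for tails.

```python
import numpy as np

# Hypothetical observed tosses (1 = heads, 0 = tails); not the data behind the graph
tosses = np.array([1, 1, 0, 1, 1, 1, 0, 1])

def likelihood(p, data):
    """Chance of getting exactly this sequence when P(heads) = p."""
    heads = data.sum()
    tails = len(data) - heads
    return p**heads * (1 - p)**tails

# Compare two proposed values of p: the one with the larger likelihood wins
print(likelihood(0.3, tosses), likelihood(0.7, tosses))

# Let p range over a fine grid in [0, 1] and find where the likelihood peaks
grid = np.linspace(0, 1, 1001)
p_best = grid[np.argmax(likelihood(grid, tosses))]
print(p_best)  # 0.75, the proportion of heads (6 out of 8)
```

The grid search is only for illustration. The calculus that follows finds the maximizer exactly.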


20.1.1. Maximum Likelihood Estimate of $p$

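Let $X_1, X_2, \ldots, X_n$ be i.i.d. Bernoulli $(p)$ random variables. In the coin-tossing example, $X_i$ is 1 if toss $i$ lands heads and 0 if it lands tails.

The random variables are discrete, so the likelihood function is defined as the joint probability mass function evaluated at the sample, as a function of $p$. If the observed values are $x_1, x_2, \ldots, x_n$, each of which is 0 or 1, then

$$
Lik(p) = \prod_{i=1}^n p^{x_i}(1-p)^{1-x_i} = p^{\sum_{i=1}^n x_i}(1-p)^{n - \sum_{i=1}^n x_i}
$$

In our example, this is the chance of the observed sequence of heads and tails when the coin lands heads with probability $p$.

The likelihood depends on the data only through the number of 1’s, just as in the binomial probability formula. The combinatorial term is missing because we observe the particular sequence of 1’s and 0’s, not just how many 1’s there are.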

You’ll soon see the reason for using the strange notation $Lik$.

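Notice that the likelihood function depends on the data. Therefore, for each fixed $p$, the value $Lik(p)$ is a random variable. For a general i.i.d. Bernoulli $(p)$ sample $X_1, X_2, \ldots, X_n$, the likelihood function is

$$
Lik(p) = p^{\sum_{i=1}^n X_i}(1-p)^{n - \sum_{i=1}^n X_i}
$$

Likelihood function: Discrete Case

Let $X_1, X_2, \ldots, X_n$ be i.i.d., each with a probability mass function $P_\theta$ that depends on a parameter $\theta$.

For each value of $\theta$, the likelihood function is the joint probability of the sample when the parameter is $\theta$:

$$
Lik(\theta) = \prod_{i=1}^n P_\theta(X_i)
$$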

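Our goal is to find the value of $p$ that maximizes $Lik(p)$.

One way to do this is by calculus. To make the calculus simpler, we recall a crucial observation we have made before: because $\log$ is an increasing function, a positive function and its $\log$ are maximized at the same point.

Taking the $\log$ also turns the product in the likelihood into a sum, which is much easier to differentiate.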

Log-likelihood function

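Let $Lik(\theta)$ be the likelihood function of an i.i.d. sample.

The function $L(\theta) = \log(Lik(\theta))$ is called the log-likelihood function. It is maximized at the same value of $\theta$ as $Lik(\theta)$.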
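This sketch checks numerically that the likelihood and its $\log$ peak at the same place. It uses a hypothetical simulated Bernoulli sample, and it also shows a practical reason to prefer the log. For a large sample, the raw product of probabilities underflows to 0 in floating point, so it cannot be maximized directly. The sum of logs stays finite.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical i.i.d. Bernoulli(0.3) sample of size 2000
sample = rng.binomial(1, 0.3, size=2000)
n = len(sample)
heads = sample.sum()

grid = np.linspace(0.01, 0.99, 981)   # spacing 0.001

# Likelihood as a product of probabilities: underflows to 0.0 at every grid point
lik = grid**heads * (1 - grid)**(n - heads)

# Log-likelihood as a sum of logs: finite everywhere on the grid
log_lik = heads * np.log(grid) + (n - heads) * np.log(1 - grid)

print(lik.max())                 # 0.0, so the argmax of lik is meaningless here
print(grid[np.argmax(log_lik)])  # within one grid step of heads / n
print(heads / n)
```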

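For the Bernoulli $(p)$ sample, write $S = X_1 + X_2 + \cdots + X_n$ for the number of 1’s. The log-likelihood function is

$$
L(p) = \log(Lik(p)) = S\log(p) + (n - S)\log(1-p)
$$

Differentiate the log-likelihood function with respect to $p$:

$$
\frac{d}{dp}L(p) = \frac{S}{p} - \frac{n - S}{1 - p}
$$

The maximum likelihood estimate (MLE) of $p$ is the value $\hat{p}$ at which $L(p)$, and hence $Lik(p)$, is largest.

Set the derivative equal to 0 and solve for the MLE $\hat{p}$:

$$
\frac{S}{\hat{p}} = \frac{n - S}{1 - \hat{p}}
$$

Hence

$$
S(1 - \hat{p}) = (n - S)\hat{p}, \quad \text{so} \quad S = n\hat{p}
$$

Therefore the MLE of $p$ is

$$
\hat{p} = \frac{S}{n} = \frac{1}{n}\sum_{i=1}^n X_i
$$

That is, the MLE of $p$ is the sample proportion of 1’s. In the coin-tossing example, it is the proportion of tosses that land heads.

Because the MLE $\hat{p}$ is the sample proportion, it is an unbiased estimator of $p$ with $SD(\hat{p}) = \sqrt{p(1-p)/n}$. By the Central Limit Theorem, its distribution is approximately normal when $n$ is large.

To be very careful, we should check that this calculation yields a maximum and not a minimum, but given the answer you will surely accept that it’s a max. You are welcome to take the second derivative of $L$ and check that it is negative:

$$
\frac{d^2}{dp^2}L(p) = -\frac{S}{p^2} - \frac{n - S}{(1 - p)^2} < 0 \quad \text{for } 0 < p < 1
$$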
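The properties of the sample proportion $\hat{p}$ can be checked by simulation. The sketch below assumes hypothetical values $p = 0.3$ and $n = 50$. It draws many Bernoulli samples and computes $\hat{p}$ for each. It then compares the empirical mean and SD of those MLEs with $p$ and $\sqrt{p(1-p)/n}$.

```python
import numpy as np

rng = np.random.default_rng(140)
p, n, reps = 0.3, 50, 20_000   # hypothetical true p, sample size, number of samples

# Each row is one i.i.d. Bernoulli(p) sample; the MLE for that sample is its mean
samples = rng.binomial(1, p, size=(reps, n))
p_hats = samples.mean(axis=1)

print(p_hats.mean(), p)                          # average MLE vs. true p
print(p_hats.std(), np.sqrt(p * (1 - p) / n))    # empirical SD vs. sqrt(p(1-p)/n)
```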


20.1.2. MLE of $\mu$ Based on a Normal Sample

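Let $X_1, X_2, \ldots, X_n$ be i.i.d. normal $(\mu, \sigma^2)$.

What if you want to estimate $\mu$? For now, treat $\sigma$ as fixed; we will estimate both parameters later in this section.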

Likelihood Function: Density Case

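In the density case, the likelihood function is defined as the joint density of the sample evaluated at the observed values, considered as a function of the parameter. That’s a bit of a mouthful, but it becomes clear once you see the calculation. The joint density in this example is the product of $n$ normal $(\mu, \sigma^2)$ densities, and hence the likelihood function is

$$
Lik(\mu) = \prod_{i=1}^n \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{1}{2}\left(\frac{X_i - \mu}{\sigma}\right)^2\right)
$$

The quantity $Lik(\mu)$ is called the likelihood of the data $X_1, X_2, \ldots, X_n$ when the mean of the underlying normal distribution is $\mu$.

The goal is to find the value of $\mu$ that maximizes this likelihood function over all possible values of $\mu$.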

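To do this we will simplify the likelihood function as much as possible:

$$
Lik(\mu) = C\exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^n (X_i - \mu)^2\right)
$$

where $C = \left(\frac{1}{\sqrt{2\pi}\,\sigma}\right)^n$ does not depend on $\mu$.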

Even in this simplified form, the likelihood function looks difficult to maximize. But as it is a product, we can simplify our calculations still further by taking its log as we did in the binomial example.

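The log-likelihood function is

$$
L(\mu) = -n\log(\sqrt{2\pi}\,\sigma) - \frac{1}{2\sigma^2}\sum_{i=1}^n (X_i - \mu)^2
$$

The first term does not depend on $\mu$, and neither does the positive factor $\frac{1}{\sigma^2}$. So the value of $\mu$ that maximizes $L(\mu)$ also maximizes the simpler function $-\frac{1}{2}\sum_{i=1}^n (X_i - \mu)^2$, which equals $L(\mu)$ when $\sigma = 1$, up to an additive constant. Here it is for an observed sample of size 4.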

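```python
import numpy as np
from datascience import make_array

sample = make_array(52.8, 51.1, 54.2, 52.5)

def shifted_log_lik(mu):
    # Normal log-likelihood in mu with sigma = 1, mu-free constant dropped
    return (-1/2) * sum((sample - mu)**2)
```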

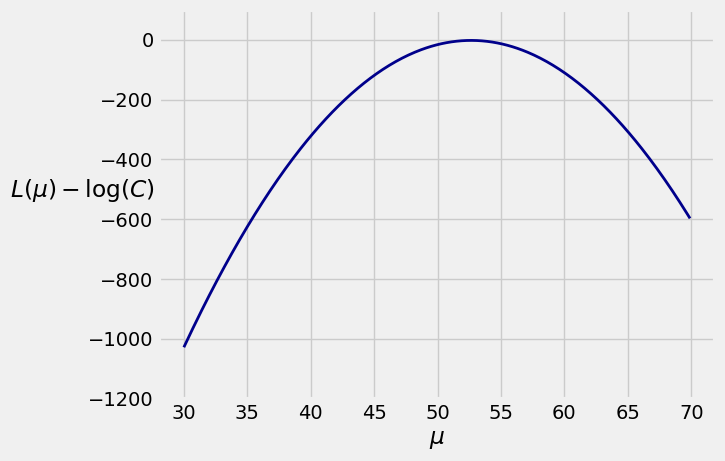

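Here is a graph of the function for a range of values of $\mu$.

The graph shows roughly where the maximum is. To find the maximizing value of $\mu$ exactly, use calculus.

Find the derivative of the log-likelihood $L(\mu)$ with respect to $\mu$:

$$
\frac{d}{d\mu}L(\mu) = \frac{1}{\sigma^2}\sum_{i=1}^n (X_i - \mu)
$$

Now set this equal to 0 and solve for the MLE $\hat{\mu}$:

$$
\sum_{i=1}^n (X_i - \hat{\mu}) = 0 \quad \Longrightarrow \quad \hat{\mu} = \frac{1}{n}\sum_{i=1}^n X_i = \bar{X}_n
$$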

Once again we should check that this is a max and not a min, but at this point you will surely be convinced that it is a max.

We have shown that the MLE of $\mu$ is the sample mean $\bar{X}_n$, whatever the value of $\sigma$. For the sample above, it is:

```python
np.mean(sample)
```

52.650000000000006

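You know that the distribution of $\bar{X}_n$ is normal with mean $\mu$ and variance $\sigma^2/n$. So the MLE $\hat{\mu}$ is an unbiased estimator of $\mu$, and its distribution is exactly normal.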
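As a check on the calculus, this sketch evaluates the same shifted log-likelihood on a grid of candidate values of $\mu$ and locates the grid maximizer. To keep the sketch free of the `datascience` package, it rebuilds `sample` with `np.array`; `make_array` produces a NumPy array too, so the values are the same.

```python
import numpy as np

# The same four observations as above, as a plain NumPy array
sample = np.array([52.8, 51.1, 54.2, 52.5])

def shifted_log_lik(mu):
    # -(1/2) times the sum of squared deviations of the data from mu
    return (-1/2) * np.sum((sample - mu)**2)

# Evaluate on a grid of candidate values of mu with spacing 0.01
mus = np.linspace(50, 56, 601)
values = np.array([shifted_log_lik(m) for m in mus])
mu_best = mus[np.argmax(values)]

print(mu_best, np.mean(sample))   # agree up to the grid spacing
```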

20.1.3. Steps for Finding the MLE

Let’s capture our sequence of steps in an algorithm to find the MLE of a parameter given an i.i.d. sample. See the Computational Notes at the end of this section for other ways of finding the MLE.

1. Write the likelihood of the sample. The goal is to find the value of the parameter that maximizes this likelihood.
2. To make the maximization easier, take the log of the likelihood function.
3. To maximize the log likelihood with respect to the parameter, take its derivative with respect to the parameter.
4. Set the derivative equal to 0 and solve; the solution is the MLE.
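The four steps can also be followed numerically, which helps when the equation in the last step is hard to solve by hand. This is a minimal standard-library sketch with hypothetical helper names (`log_lik`, `derivative`, `solve_root`). It approximates the derivative of the log-likelihood by a central difference and finds its zero by bisection. The data are a made-up Bernoulli sample, so the result can be compared with the sample proportion. Bisection assumes the derivative is positive at the left end of the search interval and negative at the right end, which holds here.

```python
import math

# Hypothetical Bernoulli sample: 7 ones and 13 zeros
data = [1] * 7 + [0] * 13

def log_lik(p):
    # Steps 1 and 2: the log of the likelihood, written as a sum of logs
    return sum(x * math.log(p) + (1 - x) * math.log(1 - p) for x in data)

def derivative(f, p, h=1e-6):
    # Step 3: central-difference approximation to f'(p)
    return (f(p + h) - f(p - h)) / (2 * h)

def solve_root(g, lo, hi, tol=1e-10):
    # Step 4: bisection for g(p) = 0, assuming g(lo) > 0 > g(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

p_hat = solve_root(lambda p: derivative(log_lik, p), 0.001, 0.999)
print(p_hat, sum(data) / len(data))   # both approximately 0.35
```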

20.1.4. Properties of the MLE

In the two examples above, the MLE is unbiased and either exactly normal or asymptotically normal. In general, MLEs need not be unbiased, as you will see in an example below. However, under some regularity conditions on the underlying density or probability mass function, when the sample is large the MLE is:

- consistent, that is, likely to be close to the parameter;
- roughly normal and almost unbiased.

Establishing this is outside the scope of this class, but in exercises you will observe these properties through simulation.
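Here is one such simulation, a sketch of consistency for the Bernoulli MLE with a hypothetical true value $p = 0.3$. It uses the fact that $\text{Binomial}(n, p)/n$ has the same distribution as the sample proportion. As the sample size grows, the fraction of simulated MLEs within 0.02 of $p$ increases toward 1.

```python
import numpy as np

rng = np.random.default_rng(1)
p = 0.3          # hypothetical true parameter
reps = 10_000    # simulated samples for each sample size

close = {}
for n in [100, 1_000, 10_000]:
    # Binomial(n, p) / n has the same distribution as the sample proportion,
    # which is the MLE of p for an i.i.d. Bernoulli(p) sample of size n
    p_hats = rng.binomial(n, p, size=reps) / n
    # Fraction of simulated MLEs within 0.02 of the true p
    close[n] = np.mean(np.abs(p_hats - p) < 0.02)
    print(n, close[n])
```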

Though there is beautiful theory about the asymptotic variance of the MLE, in practice it can be hard to estimate the variance analytically. This can make it hard to find formulas for confidence intervals. However, you can use the bootstrap to estimate the variance: each bootstrapped sample yields a value of the MLE, and you can construct confidence intervals based on the empirical distribution of the bootstrapped MLEs.
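A minimal sketch of that bootstrap procedure follows. The helper name `bootstrap_mle` is hypothetical, and the confidence interval is a percentile interval. The sketch is applied to made-up 0/1 data, where the MLE of $p$ is the sample proportion, so the bootstrap SD can be compared with the familiar value $\sqrt{\hat{p}(1-\hat{p})/n}$.

```python
import numpy as np

def bootstrap_mle(data, mle, reps=10_000, level=0.95, seed=0):
    """Resample data with replacement, recompute the MLE each time, and
    return the bootstrap SD and a percentile confidence interval."""
    rng = np.random.default_rng(seed)
    n = len(data)
    boot = np.array([mle(rng.choice(data, size=n, replace=True))
                     for _ in range(reps)])
    tail = (1 - level) / 2 * 100
    lower, upper = np.percentile(boot, [tail, 100 - tail])
    return boot.std(), (lower, upper)

# Hypothetical 0/1 data; the MLE of p is the sample proportion (np.mean)
data = np.array([1] * 18 + [0] * 32)
sd, (lower, upper) = bootstrap_mle(data, np.mean)
print(sd, lower, upper)
```

The function takes the estimator as an argument, so the same code works for any MLE that can be computed from a sample.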

20.1.5. MLEs of $\mu$ and $\sigma$ Based on a Normal Sample

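Let $X_1, X_2, \ldots, X_n$ be i.i.d. normal $(\mu, \sigma^2)$. We will now find the MLEs of both $\mu$ and $\sigma$.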

Likelihood Function

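We have to think of this as a function of both $\mu$ and $\sigma$:

$$
Lik(\mu, \sigma) = \prod_{i=1}^n \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{1}{2}\left(\frac{X_i - \mu}{\sigma}\right)^2\right) = C\sigma^{-n}\exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^n (X_i - \mu)^2\right)
$$

where $C = (2\pi)^{-n/2}$ depends on neither $\mu$ nor $\sigma$.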

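Log-Likelihood Function

$$
L(\mu, \sigma) = -\frac{n}{2}\log(2\pi) - n\log(\sigma) - \frac{1}{2\sigma^2}\sum_{i=1}^n (X_i - \mu)^2
$$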

Maximizing the Log Likelihood Function

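We will maximize $L$ in two stages:

First fix $\sigma$ and maximize $L$ with respect to $\mu$.

Then plug in the maximizing value of $\mu$ and maximize the resulting function with respect to $\sigma$.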

We have already completed the first stage in the normal example earlier in this section. For each fixed $\sigma$, the maximizing value of $\mu$ is $\hat{\mu} = \bar{X}_n$.

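So now our job is to find the value $\hat{\sigma}$ that maximizes the function obtained by setting $\mu = \bar{X}_n$ and dropping the constant $-\frac{n}{2}\log(2\pi)$:

$$
L^*(\sigma) = -n\log(\sigma) - \frac{V}{2\sigma^2}
$$

where $V = \sum_{i=1}^n (X_i - \bar{X}_n)^2$ does not depend on $\sigma$. Its derivative is

$$
\frac{d}{d\sigma}L^*(\sigma) = -\frac{n}{\sigma} + \frac{V}{\sigma^3}
$$

Set this equal to 0 and solve for the maximizing value $\hat{\sigma}$:

$$
\frac{n}{\hat{\sigma}} = \frac{V}{\hat{\sigma}^3} \quad \Longrightarrow \quad \hat{\sigma}^2 = \frac{V}{n} = \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X}_n)^2
$$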

Again you should check that this yields a maximum and not a minimum, but again given the answer you will surely accept that it’s a max.
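To see the second stage numerically, this sketch sets $\mu$ equal to the sample mean of the four observations used earlier. It then evaluates $-n\log(\sigma) - V/(2\sigma^2)$ on a grid of values of $\sigma$, where $V$ is the sum of squared deviations from the sample mean, and compares the grid maximizer with $\sqrt{V/n}$. The function name `profile_log_lik` is hypothetical.

```python
import numpy as np

sample = np.array([52.8, 51.1, 54.2, 52.5])   # the four observations used earlier
n = len(sample)
V = np.sum((sample - sample.mean())**2)        # sum of squared deviations from the mean

def profile_log_lik(sigma):
    # Log-likelihood with mu set to the sample mean, constant term dropped
    return -n * np.log(sigma) - V / (2 * sigma**2)

sigmas = np.linspace(0.1, 5, 4901)             # grid spacing 0.001
sigma_best = sigmas[np.argmax(profile_log_lik(sigmas))]

print(sigma_best, np.sqrt(V / n))              # grid maximizer vs. sqrt(V/n)
```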

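You have shown in exercises that $\hat{\sigma}^2$ is a biased estimator of $\sigma^2$:

$$
E(\hat{\sigma}^2) = \frac{n-1}{n}\sigma^2
$$

So the MLE need not be unbiased, though the bias is small when $n$ is large.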
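Here is a simulation sketch of this bias, assuming hypothetical values $\mu = 0$, $\sigma = 2$, and $n = 5$. The average of many simulated values of $\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X}_n)^2$ should be close to $\frac{n-1}{n}\sigma^2 = 3.2$ rather than $\sigma^2 = 4$. NumPy's `np.var` with its default `ddof=0` divides by $n$, so it computes $\hat{\sigma}^2$.

```python
import numpy as np

rng = np.random.default_rng(2)
mu, sigma, n, reps = 0.0, 2.0, 5, 200_000   # hypothetical settings

samples = rng.normal(mu, sigma, size=(reps, n))
sigma2_hats = np.var(samples, axis=1)        # ddof=0: divide by n, the MLE

print(sigma2_hats.mean())                    # close to (n-1)/n * sigma**2
print((n - 1) / n * sigma**2, sigma**2)
```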

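To summarize our result, if $X_1, X_2, \ldots, X_n$ are i.i.d. normal $(\mu, \sigma^2)$, then the MLEs of $\mu$ and $\sigma$ are

$$
\hat{\mu} = \bar{X}_n \quad \text{and} \quad \hat{\sigma} = \sqrt{\frac{1}{n}\sum_{i=1}^n (X_i - \bar{X}_n)^2}
$$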

It is a remarkable fact about i.i.d. normal samples that $\hat{\mu}$ and $\hat{\sigma}$ are independent, even though both are computed from the same data.

Computational Notes

The goal is to find the value of the parameter that maximizes the likelihood. Sometimes, you can do that without any calculus, just by observing properties of the likelihood function. See the Exercises.

MLEs can’t always be derived analytically as easily as in our examples. It’s important to keep in mind that maximizing log likelihood functions can often be intractable without a numerical optimization method.
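When no closed form is available, a general-purpose optimizer can maximize the log-likelihood by minimizing its negative. This sketch applies SciPy's `scipy.optimize.minimize` with `method="Nelder-Mead"` to the normal log-likelihood for the four observations used earlier. The closed-form answers are known in this case, so the output can be checked. Optimizing over $\log\sigma$ instead of $\sigma$ is a design choice of this sketch: it keeps $\sigma$ positive without imposing constraints.

```python
import numpy as np
from scipy.optimize import minimize

sample = np.array([52.8, 51.1, 54.2, 52.5])   # the four observations used earlier
n = len(sample)

def neg_log_lik(params):
    # params = (mu, log_sigma); working with log(sigma) keeps sigma positive
    mu, log_sigma = params
    sigma = np.exp(log_sigma)
    return (n / 2 * np.log(2 * np.pi) + n * np.log(sigma)
            + np.sum((sample - mu)**2) / (2 * sigma**2))

result = minimize(neg_log_lik, x0=[50.0, 0.0], method="Nelder-Mead",
                  options={"xatol": 1e-8, "fatol": 1e-8, "maxiter": 5000})
mu_hat, sigma_hat = result.x[0], np.exp(result.x[1])

print(mu_hat, sigma_hat)
print(sample.mean(), sample.std())   # closed-form MLEs; np.std divides by n by default
```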

Not all likelihood functions have unique maxima.
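As an illustration, assume hypothetically that the $X_i$ are i.i.d. uniform on the interval $(\theta, \theta + 1)$. Then the likelihood is 1 for every $\theta$ with $\max_i X_i - 1 < \theta < \min_i X_i$ and 0 otherwise, so every value in that interval is an MLE. The sketch below evaluates this likelihood on a grid for a made-up sample.

```python
import numpy as np

# Hypothetical sample, assumed i.i.d. uniform on (theta, theta + 1)
sample = np.array([3.42, 3.91, 3.17, 3.66])

def likelihood(theta):
    # Product of the densities: 1 if every observation lies in (theta, theta + 1), else 0
    inside = (sample > theta) & (sample < theta + 1)
    return float(np.all(inside))

thetas = np.linspace(2.5, 3.5, 1001)
liks = np.array([likelihood(t) for t in thetas])
maximizers = thetas[liks == liks.max()]

# Every grid value strictly between max - 1 = 2.91 and min = 3.17 maximizes it
print(maximizers.min(), maximizers.max())
```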