11.1. Balance and Detailed Balance#

The Markov chains that we have been studying have stationary distributions that contain much information about the behavior of the chain. The stationary distribution of a chain is the unique probability distribution that solves the balance equations. For some chains it is easy to identify a distribution that solves the balance equations. But for other chains, the solution can be complicated or tedious.

In this section we will see where the term balance comes from, and identify a condition under which it is easy to solve the balance equations.

See More

11.1.1. Balance#

As before, assume that we have an irreducible, aperiodic Markov chain on a finite state space, with transition matrix

To see what is being “balanced” in these equations, imagine a large number of independent replications of this chain. For example, imagine a large number of particles that are moving among the states 1 through 5 according to the transition probabilities of the sticky reflecting walk, and suppose all the particles are moving at instants 1, 2, 3,

Then at any instant and for any state

Let’s check this by looking at the equations again. For each state

For every

The left hand side is the proportion of particles leaving

The generic term in the sum on the right is the proportion of particles that left

When the two sides are equal, the chain is balanced.

11.1.2. Detailed Balance#

The left hand side of the equation above is just the proportion of particles leaving

The detailed balance equations take both directions into account. They are given by

The detailed balance equations say that for every pair of states

In the case

Detailed balance turns out to be a stronger condition than balance.

See More

11.1.3. Detailed Balance Implies Balance#

Suppose there is a probability distribution

From now on, when we say “solution” we will mean a solution that is a probability distribution.

What we learn from this is that if we can find a solution to the detailed balance equations, we will also have solved the balance equations.

This is helpful for two reasons:

The detailed balance equations are simple.

There are lots of them; indeed if there are

Of course all the

But there is an important class of Markov chains for which it is easy to see that the detailed balance equations must have a solution. Therefore for those chains we have an easy way at finding the stationary distribution.

See More

11.1.4. Birth-and-Death Chains#

A birth-and-death chain is a Markov chain on the integers, with one-step transitions restricted to going up by 1, going down by 1, or staying in place. A birth is a move up, and a death is a move down.

Such chains are used to model many different random quantities such as gamblers’ fortunes or population sizes. Look through the chains you have worked with thus far. Many of them, including the sticky reflecting random walk and the Ehrenfest chain, are birth-and-death chains. In the Ehrenfest example, we were modeling the size of the population of gas particles in a container.

For all irreducible birth-and-death chains, the detailed balance equations have a solution. This is because the only possible moves are between consecutive integers, so the only detailed balance equations that have to be solved are

For

Thus for example

This gives us an easy way to write each

We conclude the section with three examples of the use of detailed balance to find stationary distributions.

The first example revisits a simple chain we have studied before.

Next we take another look at the Ehrenfest chain of the previous chapter.

The last example is one where the detailed balance equations don’t have a solution but the chain still has a stationary distribution.

11.1.5. Sticky Reflecting Walk Revisited#

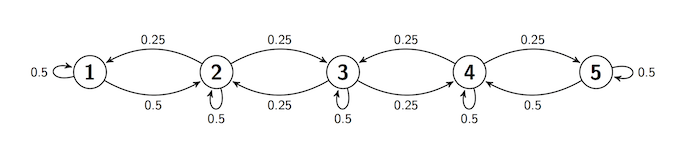

Recall the chain with transition diagram given by

We noted earlier that this is an irreducible, aperiodic chain. We can see now that it is also a birth-and-death chain. So we can solve the detailed balance equations to get the stationary distribution.

Let’s do this starting at the state 1.

So

Now use the fact that the probabilities have to sum to 1, to get

as we have seen earlier numerically and also by solving the balance equations. The method used here is easier than both of those ways.

11.1.6. Ehrenfest Chain Revisited#

We have returned to this example because it is one where solving the balance equations involved some labor. But now we know the chain is a birth-and-death chain, so we can use the detailed balance equations to find the stationary distribution.

Recall the transition rules:

At each step, select one of the

We therefore have an irreducible birth-and-death chain with the transition probabilities we calculated earlier:

The detailed balance equations are easy to solve sequentially starting at the state

and so on, so that for

by a far quicker calculation than the one we did to solve the balance equations.

At this point the calculation is the same as before: the terms have to add up to 1, which leads to

It is worth remembering that for numerical value of steady_state to find the stationary distribution, relying on Python to do all the work for you. This has some clear advantages but also some disadvantages:

Python will not be able to handle the computation when

You will either not see that the distribution is just binomial or will see it and not know why.

These are reasons why, even in the age of powerful personal computers, it is still important to find good ways of solving problems using math.

11.1.7. Sticky Random Walk on a Circle#

Suppose a chain has states 0, 1, 2, 3, 4 arranged in sequence clockwise on a circle. Suppose that at each step the chain stays in place with probability

The chain is irreducible and aperiodic. It is clear that the behavior of the chain is symmetric in the five states, and therefore in the long run it is expected to spend the same proportion of time in each state.

The stationary distribution is therefore uniform on the states. You can also check this by solving the balance equations.

Unlike the chains above, this chain can “loop back around.” It can move from state 4 to state 1. So it’s not a birth-and-death chain, and it’s not clear that the detailed balance equations are consistent.

Let’s try to solve them and see what happens. The detailed balance equations are:

So far so good, but now for the moment of truth:

For this system of equations to be consistent and have a positive solution, the two expressions for

This can only happen if

To summarize:

For all values of

When

When